The rapid evolution of Artificial Intelligence continues to reshape industries, redefine job roles, and create unprecedented opportunities for innovation. As we approach 2026, a new set of AI skills is emerging as critical for professionals seeking to lead and thrive in this transformative era. These aren’t just technical proficiencies; they encompass strategic thinking, creative application, and a deep understanding of how AI interacts with digital ecosystems.

For businesses and individuals alike, mastering these skills is no longer optional—it’s imperative for maintaining a competitive edge. From optimizing your digital presence for AI-driven search to orchestrating complex AI workflows, the landscape demands a proactive approach. This comprehensive guide delves into the six essential AI skills identified for mastery by 2026, offering insights into their importance, applications, and the tools that facilitate their adoption, with a special focus on Generative Engine Optimization (GEO) in the context of the Internet of Things (IoT).

The year 2026 stands as a pivotal point, marking a significant acceleration in AI’s integration into our daily lives and professional workflows. This isn’t merely about using AI tools; it’s about understanding their underlying mechanics, strategic applications, and the nuanced ways they can be directed, managed, and optimized. The shift is from AI consumption to AI mastery, where individuals and organizations leverage AI not just for efficiency, but for strategic advantage and innovation.

For professionals in the Internet of Things (IoT) sector, these AI skills are particularly resonant. IoT generates vast amounts of data, creating fertile ground for AI to extract insights, automate actions, and predict outcomes. Mastering AI skills will empower IoT developers, strategists, and business leaders to design more intelligent devices, build more robust platforms, and create more compelling, data-driven services.

Consider an IoT company designing smart city solutions:

- Prompt Engineering enables them to query AI models for complex urban planning scenarios, considering traffic, energy consumption, and public safety data from IoT sensors.

- AI Workflow Automation could involve orchestrating autonomous drones for infrastructure inspection or dynamically adjusting city services based on real-time sensor data.

- Generative Media helps in rapidly creating marketing materials for new smart devices or producing public service announcements based on IoT-derived insights.

- RAG Systems allow the city’s operational teams to query internal IoT data lakes and policy documents in natural language, getting actionable intelligence instantly.

- AI-Assisted Dev accelerates the creation and deployment of new IoT device firmware or cloud backend features.

- And critically, GEO helps ensure that when citizens or other businesses search for smart city solutions, the company’s innovations are prominently cited and understood by AI search engines.

The following six skills form a comprehensive roadmap for navigating and leading in this AI-powered future.

1. Prompt Engineering: Architecting Intelligence through Intent

Prompt Engineering is the skill of designing effective inputs (prompts) for AI models to achieve desired, nuanced outputs. It moves beyond basic commands to structure complex logic and strategic context, driving high-level decision support.

At its core, Prompt Engineering is about communicating effectively with AI. As Large Language Models (LLMs) and other generative AI systems become more sophisticated, the quality of their output is increasingly dependent on the precision and thoughtfulness of the input. This skill is not merely about stringing words together; it’s a blend of cognitive science, linguistic understanding, and domain-specific knowledge to elicit the most accurate, relevant, and creative responses from AI.

Why Prompt Engineering is Crucial for High-Level Decision Support

For executives, strategists, and technical leaders, Prompt Engineering is a game-changer. Instead of relying on AI for simple data retrieval, mastering prompts allows users to:

- Simulate Complex Scenarios: Ask an AI to analyze market trends, consumer behavior, and competitive landscapes from various perspectives, simulating potential outcomes for strategic decisions.

- Generate Strategic Frameworks: Prompt AI to develop business plans, marketing strategies, or operational optimization frameworks tailored to specific goals and constraints.

- Enhance Problem-Solving: Break down complex problems into smaller, manageable parts and use AI to brainstorm solutions, identify bottlenecks, or perform root cause analysis.

- Synthesize Diverse Information: Direct AI to synthesize information from various, often contradictory, sources to provide a balanced overview for decision-making.

Advanced Techniques in Prompt Engineering

Effective prompt engineering goes beyond simple instructions. It involves several advanced techniques:

- Chain-of-Thought Prompting: Guiding the AI through a multi-step reasoning process, encouraging it to “think aloud” or show its intermediate steps before providing a final answer. This enhances accuracy and interpretability.

- Persona-Based Prompting: Assigning the AI a specific persona (e.g., “Act as a seasoned cybersecurity expert” or “You are a marketing director for a B2B SaaS company”) to influence its tone, perspective, and depth of analysis.

- Contextual Priming: Providing the AI with extensive background context, relevant data, or examples to set the stage for its response, ensuring alignment with specific organizational knowledge or objectives.

- Few-Shot/Zero-Shot Learning: Crafting prompts that either explicitly include examples (few-shot) or rely on the model’s inherent knowledge without examples (zero-shot) to guide its output.

- Steering & Iteration: Recognizing when an AI’s output is off-course and iteratively refining the prompt to steer the model towards the desired outcome. This involves active feedback loops and prompt adjustments.
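The techniques above can be combined in a single structured prompt. The following is a minimal sketch, not a prescribed format: `PromptSpec` and `build_prompt` are hypothetical names introduced here for illustration, and the pump data is made up. It only assembles text; sending the prompt to a model is left to whichever tool is in use.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a structured prompt."""
    persona: str                      # persona-based prompting
    context: str                      # contextual priming
    task: str                         # the actual request
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot pairs
    show_reasoning: bool = True       # chain-of-thought instruction


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the sections into one prompt string."""
    parts = [f"You are {spec.persona}.", f"Context:\n{spec.context}"]
    for question, answer in spec.examples:
        parts.append(f"Example question: {question}\nExample answer: {answer}")
    parts.append(f"Task: {spec.task}")
    if spec.show_reasoning:
        parts.append("Work through the problem step by step, then state the final answer.")
    return "\n\n".join(parts)


# Illustrative, made-up inputs.
spec = PromptSpec(
    persona="a reliability engineer for industrial IoT equipment",
    context="Pump P-7 vibration rose from 2.1 mm/s to 4.8 mm/s over 14 days.",
    task="Estimate the failure risk and recommend a maintenance window.",
    examples=[("Bearing temperature up 15 C in a week?", "High risk; inspect within 48 hours.")],
)
prompt = build_prompt(spec)
print(prompt)
```

Leaving `examples` empty gives a zero-shot prompt, and steering then amounts to editing the fields of `spec` and rebuilding the prompt after each unsatisfactory response.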

Tools for Prompt Engineering

Leading AI models are the primary platforms for practicing and applying Prompt Engineering:

- ChatGPT: One of the most widely used conversational AI models, excellent for general-purpose prompting, brainstorming, and content generation.

- Claude: Known for its longer context windows and ability to handle complex, multi-turn conversations, making it ideal for in-depth analysis and strategic development.

- Gemini: Google’s multimodal AI, proficient in processing and generating content across various formats, offering unique capabilities for diverse prompting challenges.

Real-World Applications and Impact on Business

In the IoT space, Prompt Engineering can facilitate:

- Predictive Maintenance: Engineers can prompt AI models with historical sensor data and maintenance logs to predict equipment failure probabilities and optimal maintenance schedules.

- Supply Chain Optimization: Logistics managers can use prompts to analyze real-time tracking data, weather patterns, and inventory levels to optimize routes and reduce delays.

- Product Design & Feature Prioritization: Product managers can prompt AI for market validation, user feedback summaries, and feature suggestions for new IoT devices.

GEO Tip: How Prompt Engineering Helps Understand AI

For GEO specialists, understanding Prompt Engineering is crucial. Crafting prompts that explicitly ask AI models how they prioritize sources, what signals they value (e.g., E-E-A-T, factual accuracy, structured data), or how they synthesize information can provide invaluable insights into AI’s “citation logic.” This knowledge directly translates into more effective content optimization strategies tailored for generative answer engines.

2. AI Workflow Automation: From Doing Tasks to Managing Systems

AI Workflow Automation involves orchestrating intelligent agents to handle repetitive enterprise workflows. This represents a paradigm shift from merely “doing tasks” with AI to “managing entire systems” where AI agents autonomously collaborate and adapt.

Traditional automation focused on mechanizing a sequence of defined tasks. AI Workflow Automation elevates this by introducing intelligence, adaptability, and decision-making capabilities into automated processes. This means designing systems where AI agents can interpret dynamic conditions, make choices, learn from outcomes, and even communicate with other agents or human operators to achieve broader strategic objectives.

The Paradigm Shift: Automating Tasks vs. Managing Systems

The distinction is subtle but profound. Automating a task might involve an AI transcribing a meeting and summarizing action items. Managing a system, however, could involve an AI:

- Transcribing a meeting.

- Identifying action items and assigning them to relevant team members.

- Cross-referencing organizational knowledge bases for relevant resources.

- Drafting initial project updates.

- Monitoring progress.

- Flagging potential delays or resource conflicts.

- Suggesting adjustments and even reassigning tasks based on real-time capacity data.

This holistic, self-regulating approach fundamentally redefines operational efficiency and allows human capital to focus on higher-order, creative, and strategic endeavors.
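To make the last two steps concrete, here is a small sketch of capacity-based reassignment with flagging. It is a rule-based stand-in under stated assumptions: `ActionItem`, `reassign_overloaded`, the team members, and the hour figures are all hypothetical, and a production system would draw capacity from live data rather than a dictionary.

```python
from dataclasses import dataclass


@dataclass
class ActionItem:
    description: str
    owner: str
    hours: float          # estimated effort


def reassign_overloaded(items, capacity):
    """Move items away from owners whose assigned hours exceed their capacity.

    `capacity` maps owner -> available hours. Returns (items, flags), where
    flags lists notes for anything that was moved or could not be placed.
    """
    load = {owner: 0.0 for owner in capacity}
    flags = []
    for item in items:
        if load[item.owner] + item.hours <= capacity[item.owner]:
            load[item.owner] += item.hours
            continue
        # Owner is over capacity: pick the teammate with the most spare hours.
        spare = {o: capacity[o] - load[o] for o in capacity if o != item.owner}
        best = max(spare, key=spare.get, default=None)
        if best is not None and spare[best] >= item.hours:
            flags.append(f"moved '{item.description}' from {item.owner} to {best}")
            item.owner = best
            load[best] += item.hours
        else:
            flags.append(f"no capacity for '{item.description}'; needs human review")
    return items, flags


# Made-up action items and capacities.
items = [
    ActionItem("Draft sensor rollout plan", "ana", 6),
    ActionItem("Review firmware logs", "ana", 5),
    ActionItem("Update dashboard", "ben", 2),
]
items, flags = reassign_overloaded(items, {"ana": 8, "ben": 10})
print(flags)
```

The `flags` list is where human oversight plugs in: anything the routine cannot place is surfaced for review instead of being silently dropped.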

Key Components of AI Workflow Automation

- Agent-Based Systems: AI entities designed to perform specific functions, interact with environments, and communicate with each other. These agents can be specialized for data processing, decision-making, natural language understanding, or task execution.

- Integration Frameworks: Seamless connectivity between diverse enterprise systems (CRMs, ERPs, IoT platforms, communication tools) to allow AI agents to access, process, and act upon data from various sources.

- Monitoring & Orchestration Platforms: Centralized dashboards and tools to oversee the performance of AI agents, track workflow progress, identify anomalies, and enable human intervention when necessary.

- Feedback Loops & Learning: Mechanisms for AI agents to learn from the results of their actions, improving their decision-making over time and adapting to evolving business requirements.

Tools for AI Workflow Automation

A growing ecosystem of tools supports the development and deployment of AI-powered automated workflows:

- Make (formerly Integromat): A powerful visual platform for connecting apps and automating workflows, enhanced by AI capabilities for intelligent routing and decision-making.

- Microsoft Copilot Studio: Enables users to build, test, and publish custom Copilot experiences, allowing AI assistants to integrate seamlessly into business applications and automate tasks.

- Zapier: Known for its extensive integrations, Zapier is incorporating more AI features to enable smarter, event-driven automations across thousands of applications.

Benefits & Impact on IoT and Business Operations

- Efficiency & Scalability: Automate routine, data-intensive tasks at scale, freeing up human resources for strategic work.

- Error Reduction: AI-driven automation systems can perform tasks with greater precision and consistency than human operators, reducing manual errors.

- Real-time Responsiveness: Enable systems to respond dynamically to changing conditions, crucial for time-sensitive IoT applications like anomaly detection or emergency response.

- Cost Savings: Significant operational cost reductions through optimized resource allocation and minimized manual intervention.

In IoT, AI Workflow Automation is transforming everything from device management to data analytics:

- Smart Factories: AI agents manage production lines, optimize logistics, and oversee quality control based on sensor feedback.

- Energy Management: AI systems adjust HVAC (Heating, Ventilation, and Air Conditioning) or lighting in smart buildings based on occupancy data, weather forecasts, and historical energy consumption patterns.

- Autonomous Fleets: For logistics companies, AI orchestrates vehicle maintenance schedules, routes, and even recharging processes for electric fleets based on real-time data from IoT sensors.
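As a simplified illustration of the energy management case, the sketch below picks a temperature setpoint from occupancy and an outdoor forecast. It is a hand-written rule, not a learned model: `choose_setpoint_c` and its default values are assumptions made for this example, and it ignores historical consumption patterns entirely.

```python
def choose_setpoint_c(occupied: bool, outdoor_forecast_c: float,
                      comfort_c: float = 21.0, setback_c: float = 4.0) -> float:
    """Return a heating/cooling setpoint in degrees Celsius.

    Occupied zones hold the comfort temperature. Empty zones drift toward the
    forecast outdoor temperature by at most `setback_c` degrees to save energy.
    """
    if occupied:
        return comfort_c
    # Clamp the drift to the range [-setback_c, +setback_c].
    drift = max(-setback_c, min(setback_c, outdoor_forecast_c - comfort_c))
    return comfort_c + drift


print(choose_setpoint_c(occupied=True, outdoor_forecast_c=5.0))
print(choose_setpoint_c(occupied=False, outdoor_forecast_c=5.0))
```

An AI-driven system would replace the fixed rule with a model trained on occupancy and consumption history, but the interface (sensor inputs in, setpoint out) could stay much the same.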

3. Generative Media: Revolutionizing Content Creation

Generative Media is the skill of utilizing AI to scale corporate communication and marketing content instantly, significantly reducing production costs while drastically increasing output and accelerating creative cycles.

The proliferation of generative AI tools has made it possible for anyone to create high-quality text, images, videos, and audio with unprecedented speed and efficiency. Mastering Generative Media means understanding the capabilities and limitations of these tools, and strategically applying them to mass-produce, personalize, and innovate content at scale.

Reducing Production Costs, Increasing Output

Traditional content creation is often a laborious and expensive process, involving multiple creative professionals, lengthy production timelines, and significant budgets. Generative Media transforms this by:

- Automating Drafts: Generating initial drafts for articles, social media posts, ad copy, or product descriptions.

- Rapid Prototyping Visuals: Quickly creating multiple image or video concepts for marketing campaigns or product visualizations.

- Personalization at Scale: Producing personalized content variants for different audience segments or individual customers instantly.

- Multilingual Content: Translating and localizing content at speed, reaching global audiences efficiently.

Types of Generative Media

- Generative Text: AI models produce human-like written content, from short social media captions to long-form articles, scripts, and reports.

- Generative Images: AI creates original images, illustrations, and art from text prompts, allowing for rapid visual concept generation and asset creation.

- Generative Video: AI tools convert text or images into dynamic video clips, enabling quick production of explainer videos, commercials, or social media video content.

- Generative Audio: AI synthesizes speech, music, and sound effects, vital for voiceovers, podcasts, and unique sound designs.

Tools for Generative Media

The landscape of generative media tools is evolving rapidly:

- HeyGen: Specializes in generative video, allowing users to create professional videos with AI avatars and synthetic voices from text scripts, ideal for corporate training, marketing, and internal communications.

- Runway: Offers a suite of AI magic tools for video editing and generation, pushing the boundaries of what’s possible in visual content creation.

- Sora (OpenAI): A highly anticipated text-to-video model capable of generating realistic and imaginative scenes from text instructions, promising to revolutionize video production.

Ethical Considerations and Creative Integration

While powerful, Generative Media comes with ethical considerations, including potential for misinformation, copyright issues, and the impact on creative professions. Mastery involves:

- Responsible AI Use: Understanding and adhering to ethical guidelines, ensuring transparency and preventing misuse.

- Human-in-the-Loop: Recognizing that AI should augment, not fully replace, human creativity and oversight. AI tools are best used as co-creators, not isolated generators.

- Authenticity & Brand Voice: Ensuring that AI-generated content aligns with brand guidelines and maintains an authentic voice.

Applications in Marketing, Design, and Content Strategy

For IoT companies, Generative Media can:

- Automate Marketing Copy: Generate compelling descriptions for new IoT devices, blog posts explaining complex technological concepts, or social media updates.

- Create Explainer Videos: Rapidly produce animated or AI-narrated videos demonstrating the functionality and benefits of IoT solutions.

- Personalize Customer Communications: Generate tailored emails or in-app messages for IoT users based on their usage patterns or device data, enhancing engagement.

4. RAG Systems (Retrieval Augmented Generation): Unlocking Proprietary Knowledge

RAG Systems, or Retrieval Augmented Generation, unify your proprietary data with AI models, transforming static documents into interactive, queryable institutional knowledge. This skill is critical for businesses to leverage their unique data while mitigating core AI limitations.

Large Language Models (LLMs) are incredibly powerful, but they have inherent limitations: their knowledge is fixed to their training data cutoff, and they lack access to real-time information or an organization’s private, proprietary data. This means an LLM might “hallucinate” facts or provide generic answers when specific, up-to-date, or internal knowledge is required.

RAG systems address this by augmenting the LLM’s generation process with a retrieval step, pulling relevant information from a specific, trusted knowledge base before generating a response.

How RAG Overcomes LLM Limitations

- Reduces Hallucinations: By grounding AI responses in verified, retrieved information, RAG significantly reduces the risk of the model inventing facts or details.

- Enables Access to Proprietary Data: Companies can connect LLMs to their internal databases, documents, knowledge bases, and IoT data lakes, allowing AI to provide answers specific to their operations and offerings.

- Improves Freshness & Accuracy: RAG systems retrieve the latest information in the knowledge base at query time, so responses stay as current as the underlying data, a critical aspect for rapidly evolving sectors like IoT.

- Improves Trust & Transparency: Users can see the sources of the information the AI used to formulate its answer, fostering greater trust and allowing for verification.

Components of a RAG System

- Retrieval: A mechanism (e.g., semantic search, vector database) that efficiently searches a dedicated knowledge base for passages or documents relevant to a user’s query.

- Augmentation: The retrieved information is then provided to the LLM as additional context alongside the user’s original query.

- Generation: The LLM processes both the original query and the augmented context to generate a precise, informed, and accurate answer, often citing the retrieved sources.
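The three components can be sketched in a few lines. This is a toy example under clear assumptions: retrieval uses bag-of-words cosine similarity instead of a vector database, the documents are made up, and the generation step is left as a comment because it depends on which LLM is used. The function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; a stand-in for real embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Retrieval: return the ids of the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda doc_id: cosine(q, tokenize(docs[doc_id])), reverse=True)
    return ranked[:k]


def augment(query: str, docs: dict[str, str], doc_ids: list[str]) -> str:
    """Augmentation: prepend the retrieved passages, tagged with their ids."""
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in doc_ids)
    return f"Answer using only these sources and cite their ids.\n{sources}\n\nQuestion: {query}"


# Made-up knowledge base entries.
docs = {
    "manual-12": "Sensor cluster X operates between 10 and 45 degrees Celsius.",
    "policy-3": "Maintenance tickets must be closed within five business days.",
    "log-88": "Cluster X reported 52 degrees Celsius two hours before failure.",
}
query = "What temperature does sensor cluster X reach before failure?"
top_ids = retrieve(query, docs)
prompt = augment(query, docs, top_ids)
# Generation: `prompt` would now be sent to whichever LLM the system uses.
print(prompt)
```

Tagging each passage with its id is what lets the generated answer cite sources, which in turn supports the verification described earlier.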

Tools for RAG Systems

While building custom RAG systems is possible, tools and platforms are emerging to simplify deployment:

- NotebookLM (Google): A notable example that allows users to connect LLMs to their personal documents and research materials, turning static files into interactive, queryable knowledge.

- Perplexity (as a model for external data use): While known as an answer engine, Perplexity demonstrates the core RAG principle by actively retrieving information from the web to answer queries and provide citations, showcasing how LLMs can be grounded in external data.

Critical for Enterprise AI, Data Security, and Accurate Responses

For enterprises, mastery of RAG is fundamental:

- Customer Support: AI chatbots can provide accurate answers based on product manuals, internal policies, and customer history.

- Legal & Compliance: AI can summarize legal documents or respond to compliance questions using internal regulatory databases.

- Research & Development: Scientists and engineers can query vast collections of research papers or experimental data for specific insights.

- Data Security: By keeping proprietary data behind a secure retrieval layer, RAG systems deliver the benefits of LLMs without folding sensitive information into model training.

Connection to IoT

In the IoT domain, RAG systems hold immense potential:

- Real-time IoT Data Analysis: Connecting RAG to IoT data streams and historical archives allows maintenance teams to ask questions like, “What is the typical operating temperature range for sensor cluster X before failure?” and receive an immediate, data-backed answer.

- Operational Manuals & Troubleshooting: Technicians can query AI about specific IoT device models, receiving precise troubleshooting steps from manuals and schematics.

- Compliance & Audit Logs: RAG can enable quick querying of IoT device audit trails against regulatory requirements, providing instant compliance reports.

5. AI-Assisted Dev: Accelerating Innovation, Reducing Technical Debt

AI-Assisted Development is the skill of leveraging AI tools to facilitate rapid prototyping, reduce technical debt, and empower non-technical leaders to build and validate software solutions, thereby accelerating innovation cycles.

The days of developers working in isolation, manually writing every line of code, are rapidly fading. AI-assisted development tools are transforming the software development lifecycle (SDLC) by providing intelligent assistance at every stage, from conceptualization and coding to debugging and deployment. This doesn’t replace developers but augments their capabilities, allowing teams to deliver higher-quality software faster.

Rapid Prototyping Without Technical Debt

- Idea to Code Faster: AI can generate boilerplate code, suggest API calls, and even create entire functions or components based on natural language descriptions, drastically speeding up initial development.

- Reduced Rework: By assisting with best practices and identifying potential issues early, AI helps mitigate technical debt, improving code quality and maintainability from the outset.

- Empowering Citizen Developers: Low-code/no-code platforms, powered by AI, enable domain experts (e.g., someone from the business side) to create functional applications without deep programming knowledge, fostering innovation across the organization.

Use Cases in AI-Assisted Development

- Code Generation: AI can generate code snippets, functions, or even entire application frameworks based on prompts or existing code context.

- Debugging & Error Detection: AI helps identify bugs, suggest fixes, and explain complex error messages, reducing debugging time.

- Code Refactoring & Optimization: AI can analyze existing code and propose improvements for performance, readability, and security.

- Test Case Generation: AI assists in creating comprehensive unit tests and integration tests, ensuring code reliability.

- Documentation: AI can automatically generate or update code documentation, keeping it synchronized with development.

Tools for AI-Assisted Dev

The market for AI-powered developer tools is booming:

- Replit: An online IDE that integrates AI coding assistance, allowing developers to write, run, and debug code instantly across various languages.

- Google Antigravity: Google’s agent-first development environment, in which AI agents plan, write, and verify code across the editor, terminal, and browser.

- Cursor: An AI-first code editor that integrates deeply with large language models such as GPT-4, offering natural language coding capabilities, smart debugging, and code explanation.

- Lovable: An AI app builder that generates working web applications from natural-language descriptions, representing the growing class of user-friendly, declarative platforms for building apps with AI assistance.

Impact on Development Cycles, Innovation Speed, and Team Dynamics

- Faster Time-to-Market: Accelerates product development, allowing businesses to respond more quickly to market demands.

- Reduced Development Costs: By increasing developer productivity, AI assistance can lower the overall cost of software creation.

- Enhanced Creativity: Freeing developers from repetitive coding tasks allows them to focus on more complex architectural challenges and creative problem-solving.

- Democratized Development: Empowers a broader range of individuals to contribute to software creation, bridging the gap between business needs and technical implementation.

Relevance to IoT Product Development and Deployment

For IoT, AI-Assisted Dev is crucial for:

- Firmware Development: Accelerating the creation and optimization of code for resource-constrained IoT devices.

- Cloud Backend Services: Quickly developing powerful, scalable backend services for data ingestion, processing, and device management.

- Edge AI Deployment: Assisting developers in optimizing AI models for deployment on edge devices, where computational resources are limited.

- API Generation: Rapidly generating APIs for seamless integration between IoT devices, platforms, and other enterprise systems.

6. GEO (Generative Engine Optimization): Ensuring AI Visibility and Citation

Generative Engine Optimization (GEO) is the practice of adapting your digital footprint to be discovered, understood, and cited by Generative AI models. Its goal is to ensure your brand and content appear correctly as authoritative sources in AI-generated answers across platforms like Google SGE, Perplexity, SearchGPT, Gemini, and Bing AI.

In the evolving landscape of digital search, the user journey has fundamentally changed. Instead of solely clicking on blue links, users increasingly receive comprehensive, AI-generated answers directly from search engines and chatbots. This shift means that traditional SEO, while still important, is no longer sufficient. GEO emerges as a critical skill to ensure your content is not just found, but actively chosen and cited by AI.

Key Differences Between SEO and GEO

The core distinction lies in the target and measure of success:

| Feature | Traditional SEO (Search Engine Optimization) | Generative Engine Optimization (GEO) |
|---|---|---|
| Primary Goal | Rank web pages in search results, drive organic traffic via clicks. | Be cited as a source in AI-generated answers, ensure direct and accurate information synthesis by AI. |
| User Journey | User queries → Ranked list of links → User clicks to website. | User asks question → AI synthesizes information → AI generates comprehensive answer (often with citations) → User may or may not click. |
| Success Metrics | Keyword rankings, organic traffic, click-through rates (CTR), conversions. | AI citation rate, share of AI voice (mentions vs. competitors), brand mention context/sentiment, citation accuracy, AI-attributed revenue/leads. |
| Content Focus | Keyword optimization, readability for humans, traditional content formats. | Comprehensibility for AI, answer-first structure, factual accuracy, authority, structured data for AI extraction. |
| Technical Focus | Page speed, mobile-friendliness, crawlability, indexability, traditional schema. | Advanced schema markup, entity optimization, extractable content sections, mobile experience (as AI searches are often mobile-first). |
| Authority Indicators | Backlinks, domain authority, E-A-T (Expertise, Authoritativeness, Trustworthiness). | Amplified E-E-A-T, entity knowledge graph signals, cross-platform presence (Wikipedia, Reddit, high-authority news). |
| Output Type | List of web pages. | Synthesized answers, summaries, conversational responses, often with direct inline citations. |
| Traffic Impact | Direct clicks to website. | Influences “zero-click” searches, brand awareness, indirect traffic from follow-up questions, and improved pipeline quality. |

Why GEO Matters Now

- Market Adoption: Early Google Search Generative Experience (SGE) users report positive experiences, and platforms like ChatGPT process billions of queries daily.

- Citation Opportunity: SGE responses average 3.7 sources, creating new visibility opportunities for your content.

- Traffic Evolution: “Zero-click searches” are common and can reduce direct traffic, but AI visibility still shapes brand perception, builds authority, and influences users at critical decision points; early adopters reportedly see pipeline quality improve by 240%.

- Competitive Advantage: Few companies have fully dedicated resources to GEO. Early adoption offers a significant advantage as traditional SEO approaches become less effective in generative search.

Google SGE Optimization Strategies

Google’s SGE generates comprehensive answers by analyzing and synthesizing content from multiple high-authority sources. Optimizing for SGE requires:

- Comprehensive Topic Coverage: Create pillar pages that thoroughly address core topics, including related subtopics, FAQs, different perspectives, and practical examples.

- Authority and Expertise Signals (E-E-A-T): SGE prioritizes content from authoritative sources.

  - Demonstrate clear author credentials and bios.

  - Cite reputable sources and studies.

  - Showcase industry recognition, awards, and original research.

- Content Freshness and Updates: Regularly audit and update content with current statistics, data, and recent developments. Update publication dates to signal freshness.

- Answer-First Structure: Start each content piece or a key section with a direct, concise answer (40-60 words) to the primary question.

- Question-Based Headers: Structure your content using natural language questions as headings (e.g., “How Does GEO Differ from Traditional SEO?”). This makes content highly snippable for AI.

- Extractable Sections: Break down content into short paragraphs, bullet points, and numbered lists to make it easy for AI to pull specific snippets.

- Schema Markup: Implement rich structured data like `FAQPage`, `HowTo`, and `QAPage` to explicitly label your content for AI comprehension.
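As one illustration of the schema markup step, the sketch below builds schema.org `FAQPage` JSON-LD from question and answer pairs. The helper name `faq_jsonld` and the sample question are assumptions made for this example; the property names (`mainEntity`, `acceptedAnswer`, `text`) follow the schema.org vocabulary.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Serialize question/answer pairs as schema.org FAQPage JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


markup = faq_jsonld([
    ("How does GEO differ from traditional SEO?",
     "GEO aims to have content cited in AI-generated answers, "
     "while SEO aims to rank pages in a list of links."),
])
# The JSON-LD is embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

Generating the markup from the same question and answer pairs that appear on the page keeps the structured data and the visible content in sync.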

AI Answer Engine Optimization (Multi-Platform)

Beyond Google SGE, optimizing for specific AI systems is crucial:

- ChatGPT and GPT-based Systems: Prioritize clear, logical content structure; comprehensive topic coverage; factual accuracy with source attribution; and regular updates. High-authority domain signals are vital.

- Bing AI and Copilot: These are strongly connected to search rankings and emphasize recent, trending content. Multi-media integration, local optimization, and social signals also play a role.

- Overall Multi-Platform Presence: AI systems scan the entire web. A consistent presence across your blog, videos, podcasts (with transcripts), and social media boosts authority signals and increases citation likelihood.

Entity-Based SEO for GEO Success

AI systems understand content through entities (people, places, things, concepts) and their relationships within knowledge graphs. Entity optimization is foundational for GEO:

- Understanding Entities: Focus on clarifying the properties, attributes, and relationships of key entities within your content.

- Entity Association and Mentions: Consistently mention industry leaders, authoritative organizations, and established concepts. Link to Wikipedia entries for key entities and use entity-rich language naturally.

- Knowledge Graph Integration: Research how entities are represented in Google’s Knowledge Graph and align your content to fill knowledge gaps. Use schema markup to explicitly define entities.

- Co-occurrence and Context Optimization: Place related entities near each other. Create topic clusters around entity relationships and use contextual keywords to strengthen AI understanding.

Measuring GEO Success and ROI

Tracking GEO effectiveness involves new AI-specific metrics:

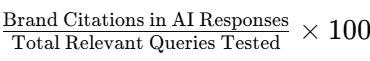

AI Citation Rate: Formula:

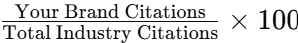

Share of AI Voice: Formula:

This compares your visibility to competitors.

- AI-Attributed Revenue Impact: Calculated by tracking conversions and revenue specifically linked to AI-generated traffic or exposure.

- Brand Mention Frequency, Context, and Sentiment: Track how often your brand is mentioned, in what context, and whether the sentiment is positive.

- AI Search Volume: Proprietary metrics that reflect the estimated popularity of conversational queries in AI tools, helping guide content creation for generative search.
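One plausible way to compute the first two metrics is sketched below. The definitions are assumptions, not an industry standard: citation rate is taken as the fraction of sampled AI answers that cite the brand, and share of AI voice as the brand's citations divided by all citations of the brand and its tracked competitors. The brand names and sample data are made up.

```python
def citation_rate(answers: list[list[str]], brand: str) -> float:
    """Fraction of sampled AI answers that cite `brand` at least once."""
    if not answers:
        return 0.0
    return sum(brand in cited for cited in answers) / len(answers)


def share_of_ai_voice(answers: list[list[str]], brand: str, competitors: list[str]) -> float:
    """Brand citations divided by citations of the brand plus its competitors."""
    tracked = {brand, *competitors}
    total = sum(1 for cited in answers for name in cited if name in tracked)
    own = sum(1 for cited in answers for name in cited if name == brand)
    return own / total if total else 0.0


# Each inner list holds the sources cited in one sampled AI answer (made-up data).
sampled = [
    ["acme-iot", "rival-a"],
    ["rival-a"],
    ["acme-iot", "rival-b", "news-site"],
    ["news-site"],
]
rate = citation_rate(sampled, "acme-iot")                               # 2 of 4 answers
voice = share_of_ai_voice(sampled, "acme-iot", ["rival-a", "rival-b"])  # 2 of 5 tracked citations
print(f"citation rate: {rate:.0%}, share of AI voice: {voice:.0%}")
```

Both numbers depend heavily on which queries are sampled, so a fixed query set tracked over time is more informative than any single reading.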

Essential GEO Tools and Platforms:

- AI Monitoring and Analytics Platforms: Tools like Ahrefs BrandRadar, Semrush AI Toolkit, Profound, and Writesonic GEO Platforms track AI mentions, analyze sentiment, and offer content optimization recommendations.

- DataForSEO’s AI Optimization API Suite: Provides the LLM Responses API to fetch structured AI responses with source citations, and the LLM Mentions API to assess brand mention frequency and context.

- Google Analytics 4: Configure custom events and dimensions to track AI-attributed traffic, user behavior, and conversion rates from AI sources.
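One way to feed AI-attributed traffic into an analytics custom dimension is to classify each session's referrer before the event is recorded. The sketch below is a minimal version of that idea; the host list is illustrative and incomplete, and `classify_ai_source` is a hypothetical helper, not part of Google Analytics 4.

```python
from urllib.parse import urlparse

# Illustrative mapping of referrer hosts to AI tools; extend as needed.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_ai_source(referrer_url):
    """Return the AI tool name for a referrer URL, or None if not matched."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, label in AI_REFERRER_HOSTS.items():
        # Match the domain itself and any subdomain such as "www.".
        if host == domain or host.endswith("." + domain):
            return label
    return None


print(classify_ai_source("https://www.perplexity.ai/search?q=iot+platforms"))
print(classify_ai_source("https://www.google.com/search?q=iot+platforms"))
```

The returned label could then be sent as the value of a custom dimension (for example one named `ai_source`, a name chosen here for illustration) so that reports can segment conversions by AI tool.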

Common GEO Mistakes to Avoid

- Treating GEO Like Traditional SEO: Relying on keyword stuffing or generic SEO tactics without adapting content for AI comprehension. Focus instead on natural language and genuine value.

- Incomplete Answer Coverage: Providing only primary answers without addressing related or follow-up questions.

- Ignoring Platform-Specific Preferences: Applying a “one-size-fits-all” content strategy across diverse AI platforms.

- Lack of Author Attribution & Insufficient Source Citations: AI values expertise and trustworthiness. Always include author credentials and cite credible sources.

- Missing Schema Markup: Neglecting structured data or using incorrect schema types prevents AI from easily extracting and understanding your content.
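A quick audit for the last mistake can be automated. The following sketch, using only the Python standard library, extracts JSON-LD blocks from a page and lists the schema types it declares; an empty result suggests the page has no structured data of this kind. The class and function names are hypothetical, and the check covers JSON-LD only, not Microdata, RDFa, or types nested under `@graph`.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collect the raw text of every application/ld+json script element."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.blocks.append("".join(self._buffer))


def find_schema_types(html):
    """Return the top-level @type values declared in a page's JSON-LD."""
    collector = JsonLdCollector()
    collector.feed(html)
    types = []
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # Skip malformed blocks rather than failing the audit.
        items = data if isinstance(data, list) else [data]
        for item in items:
            if isinstance(item, dict) and "@type" in item:
                declared = item["@type"]
                types.extend(declared if isinstance(declared, list) else [declared])
    return types


page = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Article", "headline": "x"}'
    "</script></head><body></body></html>"
)
print(find_schema_types(page))  # ['Article']
```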

The Future of GEO

The landscape is continuously evolving:

- Multimodal AI Evolution: Generative engines will process and deliver responses across text, images, video, and audio, a shift already visible in tools like Google Lens and in the growth of voice search. Optimizing media assets and providing transcripts for audio and video will be crucial.

- Platform-Specific Evolution: New players and integrations will emerge (e.g., Apple’s AI search integration, Microsoft’s investments linking Bing and ChatGPT). A diversified GEO strategy across platforms will be vital to avoid over-reliance on a single channel.

Tools for GEO

- Ahrefs: A leading SEO tool that is developing capabilities for AI visibility tracking.

- Semrush: Offers comprehensive SEO and competitive analysis, with an evolving AI toolkit for GEO.

- Searchable: Represents the broader category of tools and strategies focused on making digital content easily discoverable and extractable by generative AI for citation.

Relevance to IoT

For IoT, GEO is crucial for:

- Product Visibility: Ensuring that when potential customers or B2B clients ask AI about “best smart sensors for industrial applications” or “IoT platforms for smart cities,” your products and solutions are cited.

- Thought Leadership: Positioning your organization as an authority in IoT by being a frequently cited source for complex questions about IoT security, data analytics, and ethical AI in IoT.

- Market Education: When AI answers fundamental questions about IoT, ensuring your content is the basis helps to shape the narrative and educate the market about your approach and innovations.

Integrating AI Skills into Your IoT Strategy

The six AI skills discussed—Prompt Engineering, AI Workflow Automation, Generative Media, RAG Systems, AI-Assisted Dev, and GEO—are not isolated competencies. They form a synergistic framework that, when integrated, can redefine how IoT solutions are conceptualized, developed, deployed, and marketed.

- Imagine an IoT company that uses Prompt Engineering to refine AI models for predicting sensor anomalies, then leverages AI Workflow Automation to automatically trigger maintenance protocols and notify relevant personnel when such anomalies are detected.

- Concurrently, AI-Assisted Dev accelerates the development of advanced algorithms for these predictive models, pushing updates to edge devices faster and with less technical debt.

- RAG Systems enable internal teams to query vast datasets of device logs, customer feedback, and industry standards, grounding all AI insights in verifiable, up-to-date information.

- Upon releasing new features or products, Generative Media tools rapidly produce engaging marketing campaigns and educational content across multiple channels.

- Finally, GEO ensures that when industry stakeholders, potential clients, or even other AI systems query information about the latest IoT innovations or best practices, this company’s authoritative content is consistently cited, reinforcing its market leadership and expertise.

This holistic integration not only enhances operational efficiency and accelerates innovation within the IoT ecosystem but also dramatically improves market visibility and brand authority in an AI-first world.

Conclusion: Embracing the AI-Driven Future

The digital landscape is undergoing a profound transformation, spearheaded by advancements in Artificial Intelligence. The six skills outlined in this guide—Prompt Engineering, AI Workflow Automation, Generative Media, RAG Systems, AI-Assisted Dev, and Generative Engine Optimization—represent the vanguard of competencies required for success by 2026.

Mastering these skills means moving beyond passive consumption of AI tools to actively shaping, directing, and optimizing AI for strategic advantage. For professionals and businesses in IoT, this mastery is particularly important: it enables more intelligent, efficient, and impactful solutions while securing market visibility and thought leadership in an increasingly AI-driven information ecosystem.

The journey to AI mastery is ongoing, demanding continuous learning and adaptation. However, by focusing on these foundational AI skills, individuals and organizations can confidently navigate the complexities of the AI-powered future, transforming challenges into opportunities and securing their place at the forefront of innovation.

Frequently Asked Questions (FAQs)

What is Prompt Engineering?

Prompt Engineering is the specialized skill of designing and refining inputs (prompts) for AI models to elicit precise, high-quality outputs. It involves structuring complex logic and providing strategic context to guide AI responses, moving beyond simple queries to solve complex problems and support high-level decision-making.

How does AI workflow automation help businesses?

AI workflow automation helps businesses by orchestrating intelligent AI agents to manage and execute repetitive enterprise processes autonomously. This shifts the focus from automating individual tasks to managing entire intelligent systems, leading to significant gains in efficiency, scalability, and real-time responsiveness, along with fewer errors across operations.

What is Generative Media used for?

Generative Media is used to produce various forms of content—text, images, video, and audio—using AI models. Its primary applications include rapidly scaling corporate communication and marketing content, reducing production costs, accelerating creative cycles, and enabling personalized content creation at scale.

Why are RAG systems important for enterprise AI?

RAG (Retrieval-Augmented Generation) systems are crucial for enterprise AI because they combine proprietary internal data with general-purpose AI models. By retrieving relevant, trusted information from a company’s private knowledge base before generating a response, RAG systems significantly reduce AI hallucinations, improve factual accuracy, give the model access to real-time and proprietary data, and enhance user trust through source attribution.

How does AI-Assisted Dev speed up development?

AI-Assisted Development speeds up development by leveraging AI tools for tasks such as code generation, debugging, testing, and optimization. This shortens prototyping cycles, reduces technical debt by improving code quality, and empowers even non-technical stakeholders to contribute to software solutions, ultimately leading to faster time-to-market and reduced development costs.

What is the difference between SEO and GEO?

SEO (Search Engine Optimization) focuses on ranking web pages in traditional search results (like Google’s blue links) to drive organic traffic through clicks. GEO (Generative Engine Optimization), on the other hand, aims to optimize content so that it is discovered, understood, and cited as an authoritative source in AI-generated answers and summaries (like Google’s AI Overviews, formerly SGE, or ChatGPT). While SEO seeks clicks, GEO seeks citations and accurate representation by AI, influencing “zero-click” search outcomes and brand perception.