In the modern digital landscape, data is the lifeblood of every successful organization. From optimizing business operations to delivering personalized customer experiences and fueling AI-driven innovations, the ability to effectively manage, process, and analyze vast quantities of information is no longer a luxury but a fundamental necessity. However, the sheer volume, velocity, and variety of data today present complex challenges for businesses seeking to truly harness its potential. This is where data architecture comes into play.

A robust data architecture isn’t about collecting every piece of data; it’s about strategically designing systems that efficiently capture, store, process, and deliver meaningful insights tailored to specific business needs. The truth is, there’s no universal “best” data architecture. Instead, organizations must carefully evaluate their unique requirements—including scale, latency demands, governance models, and business maturity—to select the pattern that best aligns with their goals.

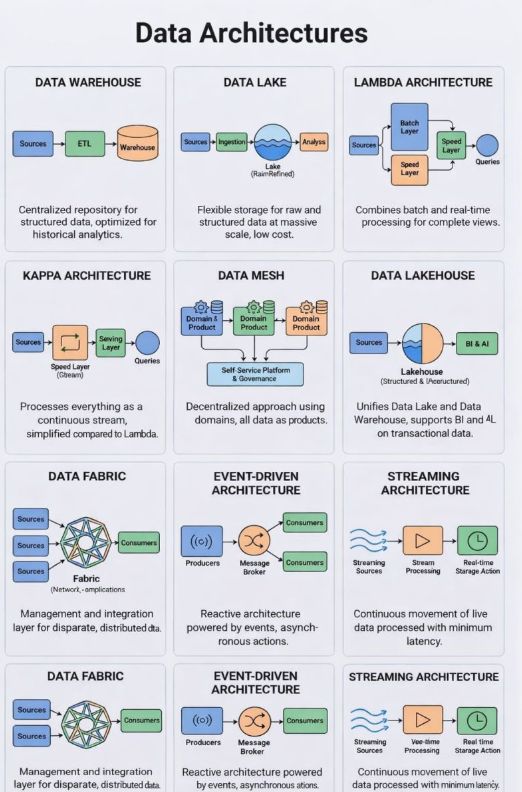

This comprehensive guide will explore various data architectural patterns, breaking down their core characteristics, ideal use cases, and how they address different business and technical challenges. By understanding these diverse approaches, you’ll be better equipped to make informed decisions for your organization’s data strategy.

The Foundation: Understanding Core Data Paradigms

Before diving into specific architectures, it’s essential to understand the fundamental concepts that underpin many of these patterns: the data warehouse and the data lake. These two paradigms often serve as foundational components or points of comparison for more advanced architectures.

Data Warehouse: The Structured Repository for Historical Analytics

At its core, a data warehouse is a centralized repository specifically designed for storing structured, historical data. Its primary purpose is to facilitate business intelligence (BI) and reporting by providing optimized access for analytical queries.

Characteristics of a Data Warehouse

- Structured Data Focus: Data warehouses primarily deal with structured data, meaning data that adheres to a predefined schema (e.g., relational databases). This structure allows for efficient querying and predictable results.

- ETL Process: Data is typically ingested into a data warehouse using an Extract, Transform, Load (ETL) process. This involves extracting data from operational systems, transforming it into a clean, consistent format, and then loading it into the warehouse. The transformation step ensures data quality and conformity to the warehouse’s schema.

- Schema-on-Write: The schema is defined before data is loaded. All incoming data must conform to this schema, ensuring high data quality and consistency from the outset.

- Optimized for Read Performance: Data warehouses are optimized for reading and analyzing large volumes of historical data, making them ideal for complex analytical queries that support BI and reporting dashboards.

- Historical Context: They store historical data over long periods, allowing for trend analysis, comparative reporting, and a comprehensive view of past business performance.
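
To make the ETL and schema-on-write ideas concrete, here is a minimal sketch using Python's built-in `sqlite3` module as a stand-in for a warehouse. The `orders` table, the sample rows, and the `transform` and `run_etl` helpers are all hypothetical and chosen only for illustration. A real warehouse would use its own loader and far richer validation.

```python
import sqlite3

# Hypothetical source rows, as they might arrive from an operational system.
RAW_ORDERS = [
    {"order_id": "1001", "amount": "19.99", "country": "us"},
    {"order_id": "1002", "amount": "5.00", "country": "DE"},
    {"order_id": "1003", "amount": "not-a-number", "country": "FR"},  # bad row
]


def transform(row):
    """Clean one raw row; raises ValueError if it cannot fit the schema."""
    return (int(row["order_id"]), float(row["amount"]), row["country"].upper())


def run_etl(raw_rows):
    """Extract, transform, and load into an in-memory table with a fixed schema."""
    conn = sqlite3.connect(":memory:")
    # Schema-on-write: the table definition exists before any data is loaded.
    conn.execute(
        "CREATE TABLE orders ("
        " order_id INTEGER PRIMARY KEY,"
        " amount REAL NOT NULL CHECK (amount >= 0),"
        " country TEXT NOT NULL)"
    )
    rejected = []
    for row in raw_rows:
        try:
            conn.execute("INSERT INTO orders VALUES (?, ?, ?)", transform(row))
        except (ValueError, sqlite3.IntegrityError):
            rejected.append(row)  # nonconforming rows never reach the table
    conn.commit()
    return conn, rejected


conn, rejected = run_etl(RAW_ORDERS)
totals = conn.execute(
    "SELECT country, SUM(amount) FROM orders GROUP BY country ORDER BY country"
).fetchall()
```

The row with an unparseable amount ends up in `rejected` rather than in the table, which is the trade-off schema-on-write makes: cleaner analytical queries at the cost of stricter ingestion.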

Ideal Use Cases for a Data Warehouse

- Business Intelligence (BI) and Reporting: Generating financial reports, sales performance dashboards, customer churn analysis, and other high-level business insights.

- Regulatory Compliance: Storing auditable historical data for compliance and reporting purposes.

- Enterprise Analytics: Providing a single source of truth for organizational data, enabling consistent metrics and reporting across different departments.

Limitations

- Rigidity: The strict schema can make it difficult to incorporate new, unstructured, or semi-structured data sources without significant re-engineering.

- Cost: Storing large volumes of data, especially with high computational demands for ETL and querying, can be expensive.

- Limited for Advanced Analytics: While good for traditional BI, data warehouses are not typically optimized for data science, machine learning (ML), or real-time analytics due to their batch-oriented nature and structured data focus.

Data Lake: The Flexible Storage for Raw and Scaled Data

In contrast to the data warehouse, a data lake offers flexible storage for raw data of any structure (structured, semi-structured, or unstructured) at massive scale and low cost. It’s designed to accommodate diverse data types and is particularly well-suited for advanced analytics and machine learning workloads.

Characteristics of a Data Lake

- Raw Data Storage: Data lakes can store data in its native format, regardless of its structure. This includes structured, semi-structured (e.g., JSON, XML), and unstructured data (e.g., text files, images, videos, audio).

- Schema-on-Read: The schema is applied when data is read, not when it’s loaded. This “schema-on-read” approach provides immense flexibility, allowing users to define schema as needed for specific analysis, without rigid upfront design constraints.

- Cost-Effective Storage: Data lakes typically leverage low-cost object storage (e.g., Amazon S3, Azure Blob Storage, Google Cloud Storage), making it economically feasible to store vast quantities of raw data indefinitely.

- Flexible Ingestion: Data can be ingested in batches or as real-time streams, often using simple ingestion mechanisms without extensive pre-processing.

- Supports Advanced Analytics: Its ability to store raw, diverse data makes it an excellent foundation for data scientists and machine learning engineers who need access to raw datasets for experimentation, model training, and feature engineering.
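
Schema-on-read can be sketched in a few lines of plain Python. The JSON lines, field names, and the `read_with_schema` helper below are hypothetical. The point is only that the raw records are stored untouched and each analysis brings its own schema.

```python
import json

# Raw events stored exactly as they arrived (hypothetical JSON lines).
RAW_LINES = [
    '{"device": "t-1", "temp_c": 21.5, "ts": "2024-01-01T00:00:00Z"}',
    '{"device": "t-2", "temp_c": "22.1"}',
    '{"device": "t-3", "humidity": 40}',
]


def read_with_schema(lines, schema):
    """Apply a schema at read time: pick fields, cast types, skip misfits."""
    for line in lines:
        record = json.loads(line)
        try:
            yield {name: cast(record[name]) for name, cast in schema.items()}
        except (KeyError, TypeError, ValueError):
            continue  # the raw line stays in storage; only this read skips it


temperature_schema = {"device": str, "temp_c": float}
readings = list(read_with_schema(RAW_LINES, temperature_schema))

# A different analysis can read the same raw lines with a different schema.
humidity_schema = {"device": str, "humidity": int}
humidity = list(read_with_schema(RAW_LINES, humidity_schema))
```

Note that the second record stores its temperature as a string, and the reader has to cast it. Cleanup work like this moves to analysis time under schema-on-read.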

Ideal Use Cases for a Data Lake

- Machine Learning and AI: Providing raw data for training complex ML models, including deep learning on unstructured data.

- Big Data Exploration: Allowing data scientists to explore vast datasets without predefined schemas, discovering hidden patterns and correlations.

- Real-time Analytics (with processing layers): While primarily storage, data lakes can be combined with streaming processing engines to support real-time analytics on ingested data.

- Data Archiving: Cost-effectively storing all organizational data for future analysis or compliance, even if its immediate use case isn’t clear.

Limitations

- “Data Swamps”: Without proper governance and metadata management, data lakes can quickly become “data swamps”—repositories of disorganized and untagged data that are difficult to navigate and use.

- Data Quality Challenges: The flexibility of “schema-on-read” means that data quality issues are often pushed downstream, requiring more effort during analysis.

- Performance for BI: Not typically optimized for fast interactive BI queries unless data is extracted, transformed, and loaded into a more structured format or specialized tools are used.

Hybrid and Specialized Architectures: Blending and Evolving Paradigms

As the demands for data processing grew, and the limitations of standalone data warehouses and data lakes became apparent, new architectural patterns emerged. These often combine the strengths of existing approaches or introduce entirely new concepts to address specific complexities.

Lambda Architecture: Batch and Speed for Complete Views

The Lambda Architecture is designed to handle both batch and real-time processing for complete and accurate views of data. It addresses the challenge of providing immediate insights from streaming data while also guaranteeing the accuracy and completeness derived from historical batch processing.

Components of Lambda Architecture

- Batch Layer: This layer processes all historical data in batches, producing accurate and comprehensive results. It holds the master dataset (the immutable, append-only record of all raw data) and pre-computes views over it. The output of this layer is stored as batch views.

- Speed Layer (Stream Layer): This layer handles real-time data streams, providing low-latency, incremental updates. It processes new data as it arrives, compensating for the latency of the batch layer by providing immediate, albeit potentially approximate, results.

- Serving Layer (Query Layer): This layer merges the results from both the batch and speed layers, allowing applications to query the data and get a complete view. It provides access to both the historically accurate batch views and the near real-time updates from the speed layer.

How it Works

Sources generate data, which is fed into both the batch layer and the speed layer. The batch layer works on the complete historical dataset to produce comprehensive results. Simultaneously, the speed layer processes new data as it arrives to provide immediate results. Both layers feed into a query layer, providing a unified view that combines the accuracy of batch processing with the immediacy of real-time processing.
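
The flow described above can be sketched with plain Python data structures. The page-view events and the function names are hypothetical, and real deployments would use separate batch and stream engines rather than two functions in one process.

```python
from collections import Counter

# Hypothetical page-view events: (event_id, page)
master_dataset = [(1, "home"), (2, "pricing"), (3, "home")]  # covered by the last batch run
recent_events = [(4, "home"), (5, "docs")]  # arrived after the last batch run


def build_batch_view(events):
    """Batch layer: recompute counts from the full historical dataset."""
    return Counter(page for _, page in events)


def update_speed_view(view, event):
    """Speed layer: incrementally fold one new event into a real-time view."""
    _, page = event
    view[page] += 1
    return view


def query(page, batch_view, speed_view):
    """Serving layer: merge both views to answer a query."""
    return batch_view.get(page, 0) + speed_view.get(page, 0)


batch_view = build_batch_view(master_dataset)
speed_view = Counter()
for event in recent_events:
    update_speed_view(speed_view, event)

home_views = query("home", batch_view, speed_view)
```

Once the next batch run has absorbed the recent events, the speed view for that period can be discarded. Keeping the two paths consistent is where most of the operational effort in Lambda goes.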

Ideal Use Cases for Lambda Architecture

- Real-time Analytics with Historical Context: Scenarios requiring both immediate data insights and the ability to reconcile these with a complete, accurate historical record. Examples include fraud detection (real-time alerts) combined with historical transaction analysis.

- Hybrid Data Processing Needs: Organizations that need sub-second latency for certain analyses but also depend on historical, complete data for other applications.

- Reporting and Dashboards: Providing dashboards that show both current operational status and historical trends.

Limitations

- Complexity: Maintaining two separate processing paths (batch and speed) for essentially the same data can introduce significant architectural and operational complexity.

- Code Duplication: Often requires writing and maintaining similar data processing logic in two different frameworks, one for batch and one for streaming.

- Data Reconciliation: Managing the reconciliation of results between the batch and speed layers can be challenging.

Kappa Architecture: Everything as a Stream

The Kappa Architecture simplifies the Lambda model by processing all data as a continuous stream. It aims to achieve the same goals as Lambda but with a more streamlined approach, making it ideal for streaming-first systems.

Characteristics of Kappa Architecture

- Stream-First Approach: All data, whether historical or real-time, is treated as a continuous stream of events. This means that even historical data processing is conceptualized as re-processing an entire stream of events from the beginning.

- Single Processing Engine: Eliminates the need for separate batch and speed layers by using a single stream processing engine capable of re-processing historical data.

- Immutable Event Log: Relies heavily on an immutable, append-only event log (like Apache Kafka) as the central data store. All data transformations are applied to this log, and new views are derived by re-processing the stream.

How it Works

Data sources feed directly into a speed layer (stream processing engine), which typically reads from an immutable message broker or log. If historical data needs to be re-processed (e.g., due to a change in business logic or model retraining), the stream processing engine simply reads the entire event log from the beginning and applies the new logic. The output is then served through a serving layer for queries.
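
A minimal sketch of this replay idea follows, using a Python list as a stand-in for the immutable log. The events, the `process` helper, and the two versions of the spend logic are hypothetical. With a real broker such as Apache Kafka, the replay would instead be a consumer reading the topic from its earliest retained offset.

```python
# Append-only event log (stand-in for a Kafka topic; hypothetical events).
event_log = [
    {"offset": 0, "user": "a", "amount": 30},
    {"offset": 1, "user": "b", "amount": 120},
    {"offset": 2, "user": "a", "amount": 90},
]


def process(log, logic, from_offset=0):
    """Run one processing function over the log, starting at from_offset."""
    view = {}
    for event in log:
        if event["offset"] >= from_offset:
            logic(view, event)
    return view


def total_spend_v1(view, event):
    """Original logic: sum every purchase per user."""
    view[event["user"]] = view.get(event["user"], 0) + event["amount"]


def total_spend_v2(view, event):
    """Changed business logic: only purchases of 50 or more count."""
    if event["amount"] >= 50:
        view[event["user"]] = view.get(event["user"], 0) + event["amount"]


view_v1 = process(event_log, total_spend_v1)
# The logic changed, so replay the same log from offset 0 to build a new view.
view_v2 = process(event_log, total_spend_v2)
```

The log itself is never modified. Both views are derived from it, so the old view can keep serving queries until the replay with the new logic has caught up.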

Ideal Use Cases for Kappa Architecture

- Streaming-First Systems: Environments where real-time data is paramount, and the operational model is intrinsically stream-oriented.

- Simplicity Over Redundancy: Organizations looking to reduce the operational overhead and complexity associated with maintaining separate batch and speed layers in a Lambda Architecture.

- Evolving Business Logic: When data processing logic is expected to change frequently, Kappa allows for easier re-processing of historical data without altering the source data.

Limitations

- Re-processing Time: Re-processing very large historical datasets as a stream can still be time-consuming and resource-intensive, potentially impacting real-time queries if not properly managed.

- Complexity for Non-Streaming Data: Kappa is designed for streams, so traditional batch sources usually have to be adapted (for example, converted into event streams) before they fit a purely stream-based paradigm.

Data Mesh: Domain-Oriented Ownership

Data Mesh is a decentralized architectural paradigm that shifts the focus from centralized data ownership to domain-oriented ownership, treating data as a product. It addresses the challenges of scalability and extensibility in large, complex organizations with diverse data needs.

Principles of Data Mesh

- Domain Ownership: Data responsibility is decentralized to cross-functional teams aligned with specific business domains (e.g., “Customer,” “Product,” “Sales”). Each domain team is responsible for its data, from ingestion to serving.

- Data as a Product: Data exposed by each domain is treated as a product, meaning it must be discoverable, addressable, trustworthy, self-describing, interoperable, and secure. This product thinking ensures high-quality, readily usable data assets.

- Self-Serve Data Platform: Provides an underlying platform that offers common capabilities (e.g., data ingestion, storage, processing, governance tooling) to domain teams, enabling them to create and manage their data products efficiently without deep infrastructure expertise.

- Federated Computational Governance: Governance is distributed across domains, with global policies and standards enforced computationally and automatically, while local implementation details are managed by domain teams.
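
As a rough illustration of "data as a product" combined with computational governance, the sketch below models a data product descriptor and two global policies that are checked automatically. The `DataProduct` fields, the policy names, and the rule that any column containing "email" counts as PII are all assumptions made for this example, not part of any Data Mesh standard.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor a domain team publishes for its data product."""
    name: str
    domain: str
    owner: str
    schema: dict
    tags: set = field(default_factory=set)


# Global policies, defined once and applied to every domain automatically.
def has_owner(product):
    return bool(product.owner)


def pii_is_tagged(product):
    has_pii_column = any("email" in col for col in product.schema)
    return (not has_pii_column) or ("pii" in product.tags)


GLOBAL_POLICIES = {"has_owner": has_owner, "pii_is_tagged": pii_is_tagged}


def violations(product):
    """Return the names of the global policies this product fails."""
    return [name for name, check in GLOBAL_POLICIES.items() if not check(product)]


customers = DataProduct(
    name="customer_profiles", domain="Customer", owner="customer-team",
    schema={"customer_id": "int", "email": "str"}, tags={"pii"},
)
orders = DataProduct(
    name="orders", domain="Sales", owner="",
    schema={"order_id": "int", "contact_email": "str"},
)

report = {p.name: violations(p) for p in (customers, orders)}
```

Each domain team decides how its product is built. The platform only runs checks like these, for instance before a product is listed in the catalog.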

Benefits of Data Mesh

- Scalability: Avoids central bottlenecks common in monolithic data architectures, allowing for independent scaling of data product development.

- Agility: Domain teams can rapidly develop and deploy new data products, responding quickly to business needs.

- Ownership and Accountability: Clear ownership leads to higher data quality and better understanding of data semantics within each domain.

- Reduced Central IT Burden: Decentralizes data development, freeing central data teams to focus on platform enablement.

Ideal Use Cases for Data Mesh

- Large, Complex Organizations: Especially those struggling with scalability, data silos, and slow delivery from a centralized data team.

- Data-Driven Enterprises: Businesses where data is critical across many different functional areas and products.

- Decentralized Operating Models: Organizations with autonomous teams already aligned to business domains.

Limitations

- Organizational Shift: Requires a significant change in organizational structure, culture, and mindset towards data ownership.

- Initial Investment: Building a robust self-serve platform and implementing federated governance can require substantial initial investment.

- Interoperability Challenges: Ensuring seamless interoperability and consistent quality across disparate data products from different domains can be complex.

Data Lakehouse: Unifying Lake and Warehouse Capabilities

The Data Lakehouse architecture is a composite design that aims to combine the flexibility and low-cost storage of a data lake with the structured management and ACID (Atomicity, Consistency, Isolation, Durability) transaction capabilities of a data warehouse. This approach seeks to provide the best of both worlds, supporting both BI and AI workloads on the same underlying data.

Core Principle of Data Lakehouse

The key enabler of a data lakehouse is the use of open table formats (e.g., Delta Lake, Apache Iceberg, Apache Hudi). These formats bring powerful data warehousing features directly to data stored in a data lake, such as:

- ACID Transactions: Ensures data integrity during concurrent read/write operations, a hallmark of traditional databases and data warehouses.

- Schema Enforcement and Evolution: Allows for strict schema guarantees while also supporting flexible schema changes without breaking downstream applications.

- Data Versioning and Time Travel: Enables access to historical versions of data, facilitating rollbacks, audits, and reproducible experiments.

- Performance Optimizations: Features like indexing, caching, and data skipping improve query performance, making data lakes more suitable for BI workloads.

How it Works

Data, whether structured, semi-structured, or unstructured, is ingested and stored in a data lake, typically using a cloud object storage service. Open table formats are then applied to this data, enriching it with transactional capabilities and schema management. This allows the data in the lake to be directly queried by BI tools (like a data warehouse) while also remaining accessible in its raw form for AI/ML workloads (like a data lake).
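
To show what schema enforcement, atomic commits, and time travel mean in practice, here is a deliberately tiny in-memory model. It is not the API of Delta Lake, Apache Iceberg, or Apache Hudi. The `ToyTable` class and its methods are hypothetical and only mimic the behavior those formats provide over files in object storage.

```python
class ToyTable:
    """Toy versioned table: each commit produces a new immutable snapshot."""

    def __init__(self, schema):
        self.schema = schema   # column name -> Python type
        self.snapshots = [()]  # version 0 is the empty table

    def commit(self, rows):
        """Validate every row, then publish a new snapshot all-or-nothing."""
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns do not match schema: {row}")
            for col, typ in self.schema.items():
                if not isinstance(row[col], typ):
                    raise ValueError(f"{col} must be {typ.__name__}: {row}")
        # Only reached if all rows passed, so readers never see a partial write.
        self.snapshots.append(self.snapshots[-1] + tuple(rows))
        return len(self.snapshots) - 1

    def read(self, version=None):
        """Read the latest snapshot, or an earlier one (time travel)."""
        return list(self.snapshots[-1 if version is None else version])


table = ToyTable({"id": int, "label": str})
v1 = table.commit([{"id": 1, "label": "a"}])
v2 = table.commit([{"id": 2, "label": "b"}])

try:
    table.commit([{"id": 3, "label": "c"}, {"id": "4", "label": "d"}])
except ValueError:
    pass  # the whole batch is rejected, including the valid first row
```

After the failed commit, `table.read()` still returns the two rows from version 2, and `table.read(version=v1)` returns the table as it looked after the first commit.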

Ideal Use Cases for a Data Lakehouse

- Consolidated Data Platform: Organizations looking to simplify their data architecture by managing both structured and unstructured data in a single platform.

- Hybrid BI and AI Workloads: Businesses that need to run traditional BI reports and dashboards alongside advanced AI/ML models on the same underlying data.

- Real-time Analytics with Transactional Integrity: Supporting real-time data ingestion and processing with guarantees similar to a transactional database.

- Cost Optimization: Leveraging inexpensive object storage while gaining the analytical capabilities of a data warehouse.

Advantages Over Lake and Warehouse

- Single Source of Truth: Reduces the data duplication and synchronization issues that arise when a data lake and a data warehouse are maintained side by side.

- Flexibility and Performance: Offers the flexibility of a data lake for raw data and AI workloads, combined with the performance and reliability of a data warehouse for BI.

- Reduced Complexity: Potentially simplifies the overall data architecture and reduces operational overhead.

Data Fabric: Integration Across Distributed Systems

A Data Fabric is an architectural approach that provides a unified, intelligent, and integrated platform spanning across diverse data sources, environments, and consumers. It focuses on metadata-driven orchestration and governance to create a seamless experience for accessing and managing distributed data.

Core Principles of Data Fabric

- Unified Access Layer: Provides a single point of access and control over data residing in various, often disparate, systems (on-premises, cloud, multiple clouds).

- Metadata Driven: Leverages extensive metadata (technical, business, operational) to automate data discovery, integration, transformation, and governance.

- Intelligent Automation: Uses AI and machine learning to automate data management tasks, such as data preparation, quality checks, and anomaly detection.

- Active Governance: Implements continuous, policy-driven governance across all data sources, ensuring compliance, security, and quality.

- Distributed Architecture: Designed to manage data across a hybrid and multi-cloud landscape, not to centralize it physically but to logically unify it.

How it Works

The data fabric doesn’t physically move all data into a single repository. Instead, it creates a virtualized layer that connects to various data sources. Through metadata management, data integration, data orchestration, and intelligent automation, it allows users and applications to discover, access, and utilize data as if it were in one place, applying consistent governance and security policies across the entire landscape.
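
The virtualized layer can be pictured as a metadata catalog plus a single read function. The sketch below is a toy under stated assumptions: the two "sources" are in-memory lists, and the catalog layout, the `read` function, and the masking rule are invented for illustration rather than taken from any data fabric product.

```python
# Two hypothetical sources that stay where they are; nothing is copied.
CRM_DB = [{"customer": "acme", "email": "ops@acme.example", "tier": "gold"}]
BILLING_API = [{"customer": "acme", "balance": 250.0}]

# Metadata catalog: where each dataset lives and which columns are sensitive.
CATALOG = {
    "customers": {"fetch": lambda: CRM_DB, "sensitive": {"email"}},
    "balances": {"fetch": lambda: BILLING_API, "sensitive": set()},
}


def read(dataset, role):
    """Single access point: resolve via metadata, then apply one masking policy."""
    entry = CATALOG[dataset]
    rows = entry["fetch"]()
    if role == "admin":
        return [dict(row) for row in rows]
    return [
        {k: ("***" if k in entry["sensitive"] else v) for k, v in row.items()}
        for row in rows
    ]


analyst_view = read("customers", role="analyst")
admin_view = read("customers", role="admin")
balances = read("balances", role="analyst")
```

Consumers call `read` with a dataset name and never need to know which system holds the data. The masking policy is written once and applies to every source registered in the catalog.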

Ideal Use Cases for Data Fabric

- Highly Distributed Data Environments: Organizations with data scattered across numerous disparate systems, on-premises data centers, private clouds, and multiple public clouds.

- Complex Data Integration Needs: When integrating data from a wide variety of sources with different formats and protocols.

- Enhanced Data Governance and Compliance: For enterprises needing to enforce consistent data governance, security, and compliance policies across a sprawling data landscape.

- Self-Service Data Access: Empowering business users and data scientists to discover and access relevant data more easily.

Benefits of Data Fabric

- Reduced Integration Complexity: Simplifies the process of integrating data from heterogeneous sources.

- Improved Data Discoverability: Makes it easier for users to find and understand available data assets.

- Consistent Governance: Applies uniform data governance and security rules across all data, regardless of its location.

- Agility and Flexibility: Adapts to evolving data sources and consumption patterns without requiring massive data migration projects.

Event-Driven Architecture: Reactive and Asynchronous Systems

An Event-Driven Architecture (EDA) is a design pattern focusing on the production, detection, consumption of, and reaction to events. It’s a key paradigm for building scalable, reactive, and resilient distributed systems.

Core Components of EDA

- Event Producers: Systems or components that generate events, representing a significant change of state (e.g., “order placed,” “user registered,” “sensor reading received”).

- Message Broker (Event Bus): A central communication layer that ingests events from producers and makes them available to consumers. Common examples include Apache Kafka, RabbitMQ, and cloud-native messaging services. It decouples producers from consumers.

- Event Consumers: Systems or services that subscribe to specific event types from the message broker and react to them by performing actions or updating their own state.

How it Works

When an event occurs in a producer system, it publishes this event to a message broker. The message broker then reliably delivers this event to all interested consumers. Consumers act independently, processing events relevant to them. This asynchronous communication model allows different parts of a system to operate independently, increasing fault tolerance and scalability.
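
The publish/subscribe flow can be sketched with a small in-process event bus. This is a simplification: the `EventBus` class is hypothetical and calls handlers synchronously, whereas a real broker such as Apache Kafka or RabbitMQ delivers events asynchronously and persists them.

```python
from collections import defaultdict


class EventBus:
    """Minimal in-process stand-in for a message broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # The producer does not know who, if anyone, is listening.
        for handler in self._subscribers[event_type]:
            handler(payload)


bus = EventBus()
emails_sent = []
stock = {"widget": 10}


def send_confirmation(order):
    """Consumer 1: record that a confirmation email was sent."""
    emails_sent.append(order["order_id"])


def reserve_stock(order):
    """Consumer 2: update inventory independently of consumer 1."""
    stock[order["item"]] -= order["quantity"]


bus.subscribe("order_placed", send_confirmation)
bus.subscribe("order_placed", reserve_stock)
bus.publish("order_placed", {"order_id": 42, "item": "widget", "quantity": 3})
```

Adding a third consumer, such as an analytics service, only requires another `subscribe` call. The code that publishes `order_placed` stays unchanged, which is the decoupling the pattern is built around.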

Ideal Use Cases for Event-Driven Architecture

- Real-time Analytics and Insights: Processing streams of events to derive immediate business insights (e.g., fraud detection, personalized recommendations, IoT data processing).

- Microservices Communication: As a robust and scalable communication mechanism between loosely coupled microservices.

- User Activity Tracking: Building audit trails, tracking user behavior on websites/apps, and triggering personalized actions.

- IoT Data Ingestion: Handling large volumes of sensor data from IoT devices.

Benefits of EDA

- Decoupling: Producers and consumers are loosely coupled, meaning changes in one system have minimal impact on others.

- Scalability: Systems can scale independently; consumers can be added or removed without affecting producers.

- Resilience: The message broker provides durability, ensuring events are not lost even if consumers are temporarily unavailable.

- Real-time Responsiveness: Enables systems to react to changes and events in near real-time.

Streaming Architecture: Low-Latency Continuous Processing

Streaming Architecture is a specialized form of data architecture designed for processing continuous flows of data with minimal latency. While related to Event-Driven Architecture (which focuses on events as triggers), Streaming Architecture specifically emphasizes the continuous computation and transformation of data in motion.

Core Principle of Streaming Architecture

The core idea is to process data as it arrives rather than storing it first and then processing it in batches. This “data in motion” approach is critical for use cases that require immediate reactions and insights.

Components of Streaming Architecture

- Streaming Sources: Any system generating continuous data streams (e.g., IoT sensors, user clicks, financial transactions, log files).

- Stream Processing Engine: A specialized software framework designed to perform operations on unbounded streams of data (e.g., Apache Flink, Spark Structured Streaming, Kafka Streams). These engines can perform filtering, aggregation, transformation, and enrichment in real time.

- Real-time Storage/Action: The output of the stream processing engine can be stored in a low-latency database, fed into a real-time analytics dashboard, trigger alerts, or initiate automated responses.

How it Works

Data flows from streaming sources into a stream processing engine. This engine continuously ingests, processes, and analyzes the data. The results are then either stored in real-time accessible storage (like a key-value store or in-memory database) or used to trigger immediate actions (e.g., sending an alert, updating machine learning models, controlling an IoT device).
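
As a small illustration of continuous computation, the sketch below averages sensor readings over fixed (tumbling) time windows and raises an alert when a window runs hot. The function names, the 10-second window, and the threshold are assumptions for the example. It also assumes readings arrive in timestamp order, which real engines do not require because they handle late and out-of-order data.

```python
def tumbling_window_avg(readings, window_seconds):
    """Yield (window_start, average) as each window closes.

    Assumes readings arrive ordered by timestamp (no late-data handling).
    """
    current_start, total, count = None, 0.0, 0
    for ts, value in readings:
        start = ts - (ts % window_seconds)
        if current_start is not None and start != current_start:
            yield current_start, total / count
            total, count = 0.0, 0
        current_start = start
        total += value
        count += 1
    if count:
        yield current_start, total / count  # flush the last open window


def alerts(averages, threshold):
    """Trigger an action for every window whose average exceeds the threshold."""
    for window_start, avg in averages:
        if avg > threshold:
            yield f"ALERT window={window_start} avg={avg:.1f}"


# Hypothetical sensor stream: (timestamp in seconds, temperature)
sensor_stream = iter([(0, 20.0), (4, 22.0), (10, 30.0), (15, 34.0), (21, 21.0)])
fired = list(alerts(tumbling_window_avg(sensor_stream, 10), threshold=25.0))
```

Both functions are generators, so each reading is processed as it arrives and only the current window is held in memory. The same shape of computation is what a stream processing engine runs at scale.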

Ideal Use Cases for Streaming Architecture

- Real-time Fraud Detection: Identifying suspicious transactions as they occur.

- IoT Monitoring and Control: Monitoring sensor data for anomalies and triggering automated responses in industrial or smart home settings.

- Personalization and Recommendation Engines: Providing immediate, context-aware recommendations based on current user behavior.

- Network Intrusion Detection: Analyzing network traffic in real-time to identify security threats.

- Algorithmic Trading: Processing market data with sub-millisecond latency for high-frequency trading strategies.

Benefits of Streaming Architecture

- Low Latency: Provides insights and triggers actions with minimal delay.

- Immediate Responsiveness: Enables systems to react instantly to changing conditions.

- Continuous Analytics: Offers always up-to-date views of operational metrics.

- Scalability: Designed to handle high-velocity data streams.

Choosing the Right Data Architecture: A Strategic Approach

The diverse landscape of data architectures highlights a crucial insight: there is no one-size-fits-all solution. The “best” architecture is always the one that most effectively addresses an organization’s specific business context, technical requirements, and strategic goals.

Factors to Consider When Selecting an Architecture

- Data Volume, Velocity, and Variety (The 3 Vs):

  - Volume: How much data are you dealing with? Petabytes? Exabytes? This impacts storage choices and processing power.

  - Velocity: How fast does your data arrive, and how quickly do you need insights? Real-time vs. daily batch processing will dictate different approaches.

  - Variety: What types of data do you have? Purely structured? A mix of structured, semi-structured, and unstructured? This is a major factor in choosing between warehouses, lakes, or lakehouses.

- Latency Requirements: Do you need insights in milliseconds (streaming), seconds/minutes (near real-time), or hours/days (batch)? This will heavily influence the choice between streaming, Kappa, Lambda, or purely batch architectures.

- Data Governance and Compliance: What are your regulatory and internal governance needs? Architectures like Data Mesh and Data Fabric provide robust frameworks for distributed governance, while data warehouses excel at structured compliance reporting.

- Organizational Structure and Maturity: Is your organization centralized or decentralized? Are your teams accustomed to traditional BI or agile, domain-driven development? Data Mesh requires a significant organizational shift, for example.

- Cost Constraints: Different architectures have varying costs associated with storage, compute, and operational overhead. Cloud-native solutions often offer cost-effective scaling for various patterns.

- Skill Set Availability: Do you have the internal expertise to implement and maintain complex architectures like Lambda or Data Mesh, or would a simpler, more managed solution like a Lakehouse be more appropriate?

- Existing Infrastructure: Can you leverage existing tools and investments, or are you building from scratch? Hybrid approaches are common in modern cloud ecosystems.

- Business Drivers: What specific problems are you trying to solve? Are you primarily focused on historical reporting, real-time customer engagement, AI innovation, or breaking down internal data silos?

Hybrid Approaches: The New Norm

In today’s cloud ecosystems (e.g., Snowflake, Azure, GCP, Databricks, Microsoft Fabric), pure architectural patterns are becoming less common and hybrid approaches are becoming the norm. Organizations often combine elements from several architectures to create a bespoke solution that fits their unique requirements. For example:

- A Data Lakehouse might form the core storage and processing layer, leveraging open table formats for both BI and AI.

- Event-Driven Architectures might feed data into this lakehouse for both real-time operational analytics and historical batch processing.

- Components of Data Fabric could then be overlaid to provide unified access and governance across the hybrid architecture, integrating with other legacy systems.

- Streaming Architectures can extract insights and trigger actions directly from the event streams before the data even lands in the lakehouse.

The key is to understand the strengths and weaknesses of each pattern and then intelligently compose them to build a resilient, scalable, and value-driven data platform.

The Future of Data Architecture

The trajectory of data architecture points towards even greater sophistication, automation, and integration. As data continues to grow in volume and complexity, future architectures will likely emphasize:

- Increased Automation: AI-powered metadata management, automatic data quality checks, and self-optimizing pipelines will reduce manual effort and improve efficiency.

- Real-time Everything: The demand for immediate insights will drive further advancements in streaming processing and low-latency data access.

- Unified Platforms: The trend towards unification, as seen in the Data Lakehouse, will continue, simplifying operations and providing a more cohesive data experience.

- Intelligent Governance: Automated and adaptive governance frameworks will be crucial for maintaining compliance and security in increasingly distributed data environments.

- Edge Computing Integration: As IoT devices proliferate, the ability to process data closer to its source (at the edge) will become a more integral part of overall data architectures, reducing latency and bandwidth usage.

The foundational principles, however, will remain constant: data must be captured, ingested, stored, processed, and used effectively to derive business value. The discipline of understanding these stages and applying the right architectural patterns will be paramount for organizations striving to maintain a competitive edge.

Empower Your Data Strategy with IoT Worlds

Navigating the complexities of modern data architectures requires deep expertise and a clear understanding of your business objectives. Are you struggling to implement a scalable data pipeline? Do you need to accelerate your real-time analytics capabilities? Is your organization grappling with disparate data sources and governance challenges?

At IoT Worlds, we specialize in designing, optimizing, and implementing robust data architectures that transform raw data into actionable intelligence. Our team of experts understands the nuances of Data Warehouses, Data Lakes, Lambda and Kappa Architectures, Data Mesh, Data Lakehouses, Data Fabrics, Event-Driven, and Streaming Architectures. We help you choose the right pattern, or a combination of patterns, to meet your unique scale, latency, governance, and business maturity requirements.

Don’t let architectural complexity hinder your data’s potential. Partner with IoT Worlds to build a future-proof data platform that drives innovation and delivers tangible business value.

Contact us today to discuss your specific data architecture needs and discover how our expertise can accelerate your data journey. Send an email to: info@iotworlds.com.