In an era saturated with data, the true value of “Big Data” isn’t found in merely accumulating vast quantities of information. It resides in the intelligent design and meticulous execution of data pipelines that transform raw, disparate inputs into actionable insights. Many organizations fall into the trap of tool-centric thinking, believing that acquiring the latest data technology will solve their challenges. However, the most successful data platforms understand that technology is merely an enabler. The real differentiator lies in the underlying architecture – a blueprint driven by clarity of purpose, rigorous discipline, and seamless flow.

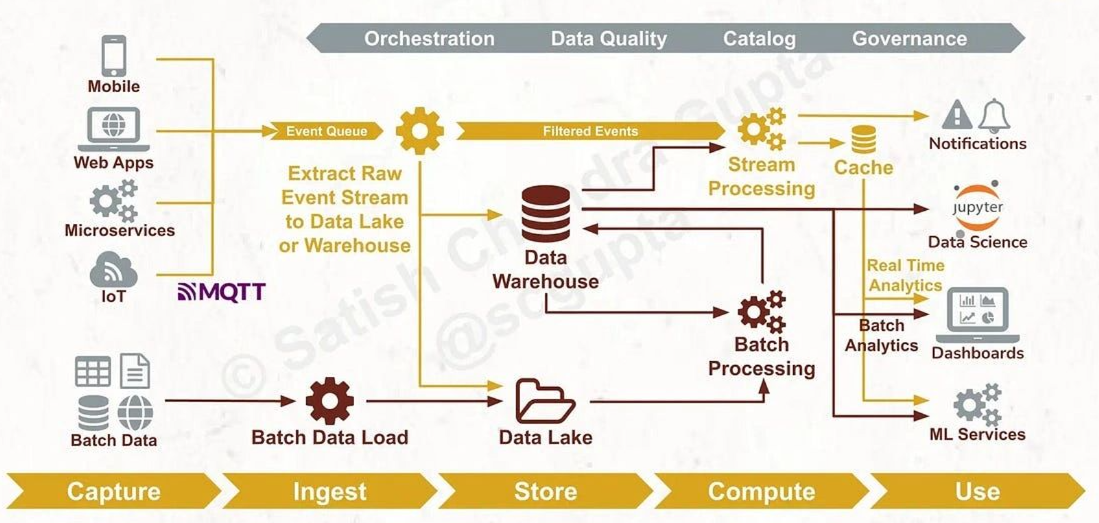

This article delves into the fundamental principles of a robust big data pipeline architecture, guided by a proven framework: Capture, Ingest, Store, Compute, and Use. We will explore how these stages, coupled with essential cross-cutting concerns like Orchestration, Data Quality, Catalog, and Governance, form the backbone of a data ecosystem that is not only performant but also scalable, resilient, and trustworthy.

The Pillars of a Sustainable Data Platform: Beyond the Hype

The allure of real-time dashboards, sophisticated machine learning models, and instant analytics can often overshadow the foundational work required to achieve them. A truly effective data platform does not jump straight to the “use” phase. Instead, it respects a methodical progression, ensuring that each preceding layer is solid and reliable. This systematic approach makes it far more likely that the insights generated are accurate, timely, and impactful.

Capture: The Genesis of Data

The initial stage of any data pipeline is the Capture phase. This is where data originates from a multitude of sources, representing the rawest form of enterprise activity. In a modern data landscape, these sources are incredibly diverse, constantly evolving, and often generating data at high velocities.

Diverse Data Producers

Modern businesses interact with customers, partners, and internal systems through various channels, each acting as a data producer.

- Mobile Applications: User interactions, purchases, clicks, and session data from mobile devices contribute significantly to the data stream. These events are often real-time and provide immediate insights into user behavior.

- Web Applications: Similar to mobile apps, web applications generate a continuous flow of data from user browsing, form submissions, and content consumption. Understanding web traffic patterns is crucial for optimizing online experiences.

- Microservices: The modular nature of microservices architectures means that individual services often emit events detailing operations, status changes, and internal communications. These events are vital for monitoring system health and understanding complex inter-service dependencies.

- IoT (Internet of Things): Perhaps one of the most rapidly expanding data sources, IoT devices – from industrial sensors to smart home gadgets – generate massive volumes of telemetry and event data. Often utilizing protocols like MQTT, these devices push data that can be critical for real-time monitoring, predictive maintenance, and operational efficiency.

Establishing Data Contracts and Schema Evolution

The Capture phase is not merely about collecting data; it’s about establishing the rules of engagement for that data from its very inception.

- Schema Evolution: Data schemas are rarely static. As applications evolve and business requirements change, so do the structures of the data being produced. A robust capture strategy anticipates this evolution, allowing for flexible schema management without breaking downstream processes. Tools and practices that support schema evolution are critical here.

- Data Contracts: Explicitly defined data contracts between data producers and consumers are paramount. These contracts specify the format, types, semantics, and expected quality of the data. Establishing these early in the pipeline prevents misinterpretations, reduces debugging time, and ensures data integrity. Without clear contracts, the reliability of the entire data ecosystem is compromised.

- Scale Considerations: The sheer volume and velocity of data from these diverse sources necessitate scalable mechanisms to handle the initial capture. This means designing systems that can ingest data concurrently from thousands or millions of individual endpoints without becoming a bottleneck.
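
The contract and schema-evolution ideas above can be sketched in a few lines. This is a minimal illustration, not a recommended library: the `PurchaseEvent` name, its fields, and the "version 2" change are all hypothetical. It shows a consumer validating payloads against an explicit contract while tolerating an additive change (a new field with a default).

```python
from dataclasses import dataclass, fields
from typing import Any


@dataclass(frozen=True)
class PurchaseEvent:
    """Hypothetical contract, version 2, for a purchase event."""
    user_id: str
    amount_cents: int
    # Added in version 2 with a default, so version 1 payloads still parse.
    currency: str = "USD"


def parse_purchase(payload: dict[str, Any]) -> PurchaseEvent:
    """Validate a raw payload against the contract or raise ValueError."""
    known = {f.name for f in fields(PurchaseEvent)}
    # Unknown fields are ignored, so producers can add data without breaking us.
    data = {key: value for key, value in payload.items() if key in known}
    try:
        event = PurchaseEvent(**data)
    except TypeError as exc:  # raised when a required field is missing
        raise ValueError(f"contract violation: {exc}") from exc
    if not isinstance(event.user_id, str) or not event.user_id:
        raise ValueError("contract violation: user_id must be a non-empty string")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(event.amount_cents, bool) or not isinstance(event.amount_cents, int):
        raise ValueError("contract violation: amount_cents must be an integer")
    if event.amount_cents < 0:
        raise ValueError("contract violation: amount_cents must be non-negative")
    return event


old_payload = {"user_id": "u1", "amount_cents": 500}                     # v1 producer
new_payload = {"user_id": "u2", "amount_cents": 900, "currency": "EUR"}  # v2 producer
print(parse_purchase(old_payload))
print(parse_purchase(new_payload))
```

Adding a field with a default is the kind of change that older producers and newer consumers can both live with. Removing or retyping a field would need a coordinated contract change instead.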

Ingest: The Data’s First Journey

Once captured, data needs to be transported reliably and efficiently into the core data infrastructure. The Ingest layer is responsible for this crucial step, acting as the gateway for all incoming information. Its design largely dictates the reliability and replayability of the entire pipeline. A weak ingestion layer will inevitably lead to a fragile data platform.

Event Queues and Streaming Pipelines

For high-velocity, real-time data, event queues and streaming pipelines are indispensable.

- Event Queues: Technologies like Apache Kafka or similar messaging queues serve as central hubs for incoming events. They provide durability, fault tolerance, and the ability to handle bursts of data, decoupling producers from consumers. This means that if downstream systems are temporarily unavailable, data is not lost, as long as consumers catch up before the queue’s retention period expires.

- Streaming Pipelines: More sophisticated streaming platforms can perform initial filtering, routing, and basic transformations on the data as it flows. This pre-processing can reduce the load on subsequent stages and ensure that only relevant data proceeds. The diagram illustrates an “Event Queue” leading to an “Extract Raw Event Stream to Data Lake or Warehouse” component, which can perform this initial filtering, creating “Filtered Events” that feed into stream processing.
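
The filtering and routing step can be illustrated without any particular streaming platform. In the sketch below a plain Python list stands in for the event queue, and the event shape (a dict with a `type` field) is an assumption made for the example.

```python
from collections import defaultdict
from typing import Callable, Iterable, Iterator

Event = dict  # hypothetical event shape: {"type": str, ...}


def filter_events(events: Iterable[Event], keep: Callable[[Event], bool]) -> Iterator[Event]:
    """Lazily yield only the events the predicate accepts."""
    for event in events:
        if keep(event):
            yield event


def route_by_type(events: Iterable[Event]) -> dict[str, list[Event]]:
    """Group events by their `type` field, standing in for routing to topics."""
    routes: dict[str, list[Event]] = defaultdict(list)
    for event in events:
        routes[event["type"]].append(event)
    return dict(routes)


raw_stream = [
    {"type": "click", "user": "u1"},
    {"type": "heartbeat", "device": "d9"},
    {"type": "purchase", "user": "u1", "amount_cents": 500},
]
# Drop heartbeats before they reach stream processing.
filtered = list(filter_events(raw_stream, lambda e: e["type"] != "heartbeat"))
routed = route_by_type(filtered)
print(sorted(routed))
```

Because `filter_events` is a generator, it processes one event at a time, which mirrors how a streaming stage handles data as it flows rather than loading it all first.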

Batch Loaders

While streaming is vital for real-time needs, many traditional data sources operate on a batch basis.

- Batch Data Load: For sources like legacy databases, file systems, or external data feeds that are updated periodically, batch loaders are used. These processes typically run on a schedule, collecting and transferring data in larger chunks. The diagram explicitly shows “Batch Data” leading to a “Batch Data Load” component.
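
A batch loader typically reads a periodic export in chunks rather than all at once. The sketch below assumes a CSV export with hypothetical `order_id` and `amount_cents` columns and uses an in-memory `StringIO` in place of a real file.

```python
import csv
import io
from itertools import islice
from typing import Iterator, TextIO


def load_in_batches(source: TextIO, batch_size: int) -> Iterator[list[dict[str, str]]]:
    """Yield rows from a CSV source in fixed-size batches."""
    reader = csv.DictReader(source)
    while True:
        batch = list(islice(reader, batch_size))
        if not batch:
            return
        yield batch


# A StringIO stands in for a nightly export file (hypothetical data).
export = io.StringIO("order_id,amount_cents\n1,500\n2,1250\n3,75\n")
batches = list(load_in_batches(export, batch_size=2))
for number, batch in enumerate(batches, start=1):
    print(f"batch {number}: {len(batch)} rows")
```

Bounding the batch size keeps memory use predictable regardless of how large the export grows.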

Reliability and Replayability

The ingestion layer is where the commitment to data reliability truly begins.

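- Guaranteed Delivery: Modern ingestion systems offer different levels of delivery guarantee. At-least-once delivery ensures no data is lost but can deliver the same event more than once, so consumers must deduplicate or process idempotently. Exactly-once semantics, where the platform supports them, aim to avoid both loss and duplication.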

- Replayability: A critical feature for any robust data platform is the ability to replay historical data. This is invaluable for recovering from errors, backfilling missing data, testing new processing logic, or retraining models. The design of the ingest layer, particularly through durable event queues, directly supports this capability.
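
Both ideas can be shown with a toy append-only log. The `EventLog` and `IdempotentTotals` classes below are hypothetical stand-ins, not the API of any real queue: the log keeps every record addressable by offset (which is what makes replay possible), and the consumer remembers event ids so redelivered events do not count twice.

```python
class EventLog:
    """Append-only in-memory log; each record gets an increasing offset."""

    def __init__(self) -> None:
        self._records: list[dict] = []

    def append(self, record: dict) -> int:
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset: int):
        """Yield (offset, record) pairs from `offset`; reading never deletes."""
        for i in range(offset, len(self._records)):
            yield i, self._records[i]


class IdempotentTotals:
    """Consumer that tolerates redelivery by remembering processed event ids."""

    def __init__(self) -> None:
        self.seen: set[str] = set()
        self.total = 0

    def handle(self, record: dict) -> None:
        if record["event_id"] in self.seen:
            return  # duplicate from at-least-once delivery: ignore
        self.seen.add(record["event_id"])
        self.total += record["amount"]


log = EventLog()
for i, amount in enumerate([10, 20, 30]):
    log.append({"event_id": f"e{i}", "amount": amount})

consumer = IdempotentTotals()
for _, rec in log.read_from(0):
    consumer.handle(rec)
# Simulate redelivery after a crash: re-read from offset 1.
for _, rec in log.read_from(1):
    consumer.handle(rec)

# Replay the full history into a fresh consumer, e.g. to test new logic.
replayed = IdempotentTotals()
for _, rec in log.read_from(0):
    replayed.handle(rec)
print(consumer.total, replayed.total)
```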

Store: The Data Repository

After ingestion, data needs a place to reside. The Store layer is where this crucial function occurs, providing long-term persistence and enabling access for subsequent processing and analysis. A well-designed storage strategy is not about choosing one technology over another; it’s about judiciously blending solutions to achieve separation of concerns.

Raw Event Storage

A fundamental principle of robust data architecture is to always store raw events first.

- Data Lake: The data lake serves as the primary repository for raw, untransformed data. It accepts data in its original format from diverse sources, offering immense flexibility for future analytical needs. Storing raw data ensures that you have an immutable record, allowing for re-processing with new assumptions or algorithms as your understanding of the data evolves. The diagram shows the “Extract Raw Event Stream” feeding directly into a “Data Lake” and “Batch Data Load” also feeding into it.

- Immutability: The raw data in the data lake should ideally be immutable, meaning once written, it cannot be changed. This provides an audit trail and foundational truth for all subsequent data transformations.
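
One simple way to picture write-once raw storage is a date-partitioned directory where existing files are never overwritten. The sketch below uses the local filesystem and a `dt=YYYY-MM-DD` partition layout, both of which are assumptions for illustration; object stores usually offer their own mechanisms for the same goal, and real lakes batch many events per file.

```python
import json
import tempfile
from pathlib import Path


def write_raw_event(lake_root: Path, event_date: str, event_id: str, event: dict) -> Path:
    """Write one raw event to a date partition; refuse to overwrite existing data."""
    partition = lake_root / f"dt={event_date}"
    partition.mkdir(parents=True, exist_ok=True)
    path = partition / f"{event_id}.json"
    # Mode "x" raises FileExistsError if the file already exists (write-once).
    with open(path, "x", encoding="utf-8") as fh:
        json.dump(event, fh)
    return path


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    first = write_raw_event(root, "2024-01-01", "e1", {"type": "click"})
    try:
        write_raw_event(root, "2024-01-01", "e1", {"type": "tampered"})
        overwritten = True
    except FileExistsError:
        overwritten = False
    stored = json.loads(first.read_text(encoding="utf-8"))

print(overwritten, stored)
```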

Purpose-Built Warehouses

While data lakes are excellent for raw data, structured and refined data finds its home in a data warehouse.

- Data Warehouse: This is where transformed, cleaned, and organized data resides, structured for efficient querying and reporting. It acts as the central source of truth for business intelligence and analytical applications. The diagram shows the “Extract Raw Event Stream” also leading to a “Data Warehouse”, and the “Data Lake” also feeds into the “Data Warehouse” (often after some initial batch processing).

- Separation of Concerns: The coexistence of a data lake and a data warehouse is not redundancy; it’s a strategic separation of concerns. The data lake provides flexibility and archival for raw data, while the data warehouse offers optimized performance and structure for consumption by business users and applications.

Compute: Transforming Data into Insight

The Compute layer is where raw and stored data is transformed, enriched, and aggregated into formats suitable for consumption. No single compute approach fits every workload; a blend of streaming and batch processing is needed to meet diverse business requirements.

Streaming for Immediacy

For use cases demanding immediate insights and rapid reactions, streaming computation is vital.

- Stream Processing: This involves processing data continuously as it arrives, enabling near-real-time analytics, anomaly detection, and immediate notifications. The “Filtered Events” from the ingestion layer directly feed into “Stream Processing.” This processed data can then update caches, trigger notifications, or contribute to real-time analytics.

- Low-Latency Operations: Streaming compute is optimized for low-latency operations, performing calculations and transformations on data windows as small as seconds or milliseconds.
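
Windowed computation is easiest to see with a tumbling (fixed, non-overlapping) window. The function below is a simplified sketch over a finite list of `(timestamp_seconds, payload)` pairs, an event shape assumed for the example. A real stream processor would emit results as each window closes and would need a policy for late-arriving events.

```python
from typing import Iterable


def tumbling_window_counts(events: Iterable[tuple[int, str]], window_seconds: int) -> dict[int, int]:
    """Count events per fixed, non-overlapping window, keyed by window start."""
    counts: dict[int, int] = {}
    for timestamp, _payload in events:
        # Integer division snaps each timestamp to the start of its window.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start] = counts.get(window_start, 0) + 1
    return counts


events = [(0, "a"), (4, "b"), (5, "c"), (11, "d")]
counts = tumbling_window_counts(events, window_seconds=5)
print(counts)
```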

Batch for Correctness, Depth, and History

While streaming provides immediacy, batch processing remains critical for comprehensive, historical analysis and ensuring data correctness over large datasets.

- Batch Processing: This involves processing large volumes of historical data at scheduled intervals. It excels at complex transformations, aggregations, and joins across vast datasets, which might be too resource-intensive for real-time streaming. The “Data Lake” and “Data Warehouse” both feed into “Batch Processing.”

- Historical Context and Accuracy: Batch processing is ideal for generating aggregated reports, running deep learning models over historical data, and producing financial or regulatory figures, where completeness and correctness matter more than latency.

Blending with Intent

The power of a modern data platform comes from the intentional blending of both streaming and batch compute paradigms. Data often flows through a stream processing pipeline for immediate action, simultaneously being stored in the data lake for later, more thorough batch analysis. This dual approach maximizes both responsiveness and analytical depth.
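
A toy version of this dual path is sketched below. The `DualPathPipeline` class and its event fields are hypothetical. The streaming side keeps a fast running total that may overcount when an event is delivered twice, while the batch side recomputes from the stored raw events and removes duplicates.

```python
class DualPathPipeline:
    """Send every event down a fast path and a durable path at once."""

    def __init__(self) -> None:
        self.raw_store: list[dict] = []  # stands in for the data lake
        self.live_total_cents = 0        # stands in for a streaming aggregate

    def on_event(self, event: dict) -> None:
        self.raw_store.append(event)                    # keep everything, untouched
        self.live_total_cents += event["amount_cents"]  # fast, approximate

    def batch_total_cents(self) -> int:
        """Recompute from raw data, dropping duplicate deliveries by event_id."""
        unique = {e["event_id"]: e["amount_cents"] for e in self.raw_store}
        return sum(unique.values())


pipeline = DualPathPipeline()
for event in [
    {"event_id": "e1", "amount_cents": 100},
    {"event_id": "e2", "amount_cents": 250},
    {"event_id": "e1", "amount_cents": 100},  # duplicate delivery
]:
    pipeline.on_event(event)

print(pipeline.live_total_cents, pipeline.batch_total_cents())
```

The live figure is available immediately; the batch figure arrives later but corrects it, which is the trade-off the two paradigms are blended to cover.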

Use: Delivering Business Value

The ultimate goal of any big data pipeline architecture is to deliver tangible business value. The Use layer represents the various ways in which processed data is consumed by stakeholders and applications. The effectiveness of this layer is directly dependent on the robustness and accuracy of all preceding stages.

Diverse Consumption Patterns

Data consumers have varied needs, from interactive dashboards to automated machine learning predictions.

- Dashboards: Visual representations of key performance indicators (KPIs) and operational metrics provide business users with an accessible view of data-driven insights. The diagram shows “Dashboards” being fed by “Real Time Analytics” and “Batch Analytics.”

- Real-time Analytics: Applications that provide instant feedback and insights, often powered by stream processing outputs. This can include personalized recommendations, fraud detection, or dynamic pricing.

- Data Science and Machine Learning Services: Processed data serves as the foundation for data scientists to build, train, and deploy machine learning models. The diagram illustrates “Jupyter” (a common data science environment) being fed by “Data Warehouse” and “Cache”, and “ML Services” being fed by “Batch Processing” and “Stream Processing.” These services operationalize models for tasks like predictive analysis, customer segmentation, or natural language processing.

- Notifications: Automated alerts and notifications can be triggered by specific events or thresholds identified through stream processing, providing immediate awareness of critical situations.

The Result of Upstream Excellence

The business value generated in the Use phase is a direct reflection of the quality and integrity of the data pipeline upstream. If the Capture, Ingest, Store, and Compute layers are not meticulously designed and maintained, the insights consumed will be flawed, leading to poor decision-making and erosion of trust in the data.

Cross-Cutting Concerns: The Unsung Heroes

Beyond the sequential stages of the data pipeline, several critical cross-cutting concerns underpin the entire architecture. These are not afterthoughts but integral components that ensure the overall health, trustworthiness, and efficiency of the data platform.

Orchestration: The Conductor of the Pipeline

Orchestration is the backbone that sequences, schedules, monitors, and manages the various tasks within the data pipeline. Without effective orchestration, data workflows become chaotic, prone to errors, and difficult to manage.

- Workflow Management: Orchestration tools define the dependencies between tasks, ensuring they execute in the correct order. For example, a batch processing job might only start after a batch data load is complete.

- Scheduling: Tasks are scheduled to run at specific times or intervals, or triggered by events.

- Monitoring and Alerting: Orchestration platforms continuously monitor the execution of workflows, detecting failures or bottlenecks and triggering alerts for human intervention.

- Retries and Error Handling: Robust orchestration includes mechanisms for automatically retrying failed tasks and defining strategies for handling errors gracefully.
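
Dependency ordering and retries can be sketched with Python's standard-library `graphlib`. The task names and the `run_pipeline` helper are hypothetical, and a production orchestrator adds scheduling, backoff, and alerting that this example leaves out.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(tasks: dict[str, Callable[[], None]],
                 depends_on: dict[str, set[str]],
                 max_attempts: int = 3) -> list[str]:
    """Run tasks in dependency order, retrying each up to `max_attempts` times."""
    completed: list[str] = []
    for name in TopologicalSorter(depends_on).static_order():
        for attempt in range(1, max_attempts + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # give up; a real orchestrator would alert here
        completed.append(name)
    return completed


attempts = {"batch_process": 0}


def flaky_batch_process() -> None:
    """Fail on the first call only, to exercise the retry path."""
    attempts["batch_process"] += 1
    if attempts["batch_process"] == 1:
        raise RuntimeError("transient failure")


tasks = {
    "batch_load": lambda: None,
    "batch_process": flaky_batch_process,
    "publish": lambda: None,
}
# Each task maps to the set of tasks that must finish before it starts.
depends_on = {
    "batch_load": set(),
    "batch_process": {"batch_load"},
    "publish": {"batch_process"},
}
order = run_pipeline(tasks, depends_on)
print(order)
```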

Data Quality: Ensuring Trustworthiness

Data quality is not a feature; it’s a continuous process that ensures the data is accurate, consistent, complete, and timely. Poor data quality can undermine the most sophisticated analytics and machine learning models.

- Validation Rules: Implementing checks at various stages to validate data against predefined rules (e.g., ensuring numeric fields contain numbers, checking for valid ranges).

- Cleansing and Standardization: Processes to correct errors, remove duplicates, and normalize data into a consistent format.

- Profiling and Monitoring: Regularly profiling data to understand its characteristics and monitoring for deviations from expected patterns.

- Automated Testing: Integrating data quality tests into the CI/CD pipeline to catch issues early.
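
Validation rules like these are often expressed as small named checks, so that rejected records carry the reason they failed. The rule names, fields, and the 10,000 upper bound below are illustrative assumptions.

```python
from typing import Any, Callable


def is_number(value: Any) -> bool:
    """True for int or float, excluding bool (a subclass of int in Python)."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


# Each rule is (description, check); names and thresholds are hypothetical.
RULES: list[tuple[str, Callable[[dict], bool]]] = [
    ("amount is a number", lambda r: is_number(r.get("amount"))),
    ("amount in valid range", lambda r: is_number(r.get("amount")) and 0 <= r["amount"] <= 10_000),
    ("country is present", lambda r: bool(r.get("country"))),
]


def failed_rules(record: dict) -> list[str]:
    """Return the descriptions of every rule the record violates."""
    return [name for name, check in RULES if not check(record)]


def split_by_quality(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Separate clean records from rejected ones, keeping the failure reasons."""
    valid, rejected = [], []
    for record in records:
        failures = failed_rules(record)
        if failures:
            rejected.append((record, failures))
        else:
            valid.append(record)
    return valid, rejected


records = [
    {"amount": 50, "country": "DE"},
    {"amount": "50", "country": ""},
    {"amount": 20_000, "country": "US"},
]
valid, rejected = split_by_quality(records)
print(len(valid), [reasons for _, reasons in rejected])
```

Because the checks are plain functions, the same rules can run inside the pipeline and as assertions in an automated test suite.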

Catalog: The Data Directory

A data catalog acts as a centralized inventory of all data assets within the organization, providing metadata, lineage, and context. It is essential for discoverability, understanding, and governance.

- Metadata Management: Storing technical metadata (schemas, data types), business metadata (definitions, ownership), and operational metadata (usage statistics, quality scores).

- Data Discoverability: Enabling users to easily find and understand available data assets.

- Data Lineage: Tracking the origin and transformation history of data, from source to consumption, which is crucial for auditing, debugging, and compliance.

- Glossary and Definitions: Providing a common language and definitions for data elements, reducing ambiguity.
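
At its core, a catalog is a searchable registry of datasets plus the links between them. The sketch below is a deliberately small in-memory version; the `DatasetEntry` and `Catalog` classes and the dataset names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    owner: str
    description: str
    upstream: list[str] = field(default_factory=list)  # direct sources


class Catalog:
    def __init__(self) -> None:
        self._entries: dict[str, DatasetEntry] = {}

    def register(self, entry: DatasetEntry) -> None:
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[str]:
        """Find datasets whose name or description mentions the term."""
        needle = term.lower()
        return sorted(
            name for name, e in self._entries.items()
            if needle in name.lower() or needle in e.description.lower()
        )

    def lineage(self, name: str) -> list[str]:
        """Return all transitive upstream datasets, nearest first."""
        seen: list[str] = []
        queue = list(self._entries[name].upstream)
        while queue:
            current = queue.pop(0)
            if current in seen:
                continue
            seen.append(current)
            if current in self._entries:
                queue.extend(self._entries[current].upstream)
        return seen


catalog = Catalog()
catalog.register(DatasetEntry("raw_events", "platform-team", "Raw application events"))
catalog.register(DatasetEntry("clean_orders", "data-eng", "Deduplicated orders", ["raw_events"]))
catalog.register(DatasetEntry("revenue_dashboard", "analytics", "Daily revenue by country", ["clean_orders"]))

print(catalog.search("orders"))
print(catalog.lineage("revenue_dashboard"))
```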

Governance: The Rules of Engagement

Data governance establishes the policies, processes, and responsibilities for managing and protecting data assets. It ensures compliance, security, and ethical use of data.

- Access Control: Defining who can access which data and under what conditions, often integrating with identity and access management systems.

- Security: Implementing encryption, masking, and other security measures to protect sensitive data at rest and in transit.

- Compliance: Ensuring that data handling practices adhere to regulatory requirements (e.g., GDPR, CCPA, HIPAA).

- Auditability: Maintaining a comprehensive audit trail of data access and modifications.

- Data Ownership: Clearly defining ownership and accountability for data assets.
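
Access control, masking, and auditability often meet at the point where a record is read. The following sketch assumes a simple role-to-column policy; the roles, column names, and masking rule are all hypothetical, and a real deployment would delegate these decisions to an identity and access management system.

```python
# Hypothetical policy: which columns each role may read, and which are masked.
READABLE = {
    "analyst": {"order_id", "country", "email"},
    "support": {"order_id", "email"},
}
MASKED = {"analyst": {"email"}}


def mask(value: str) -> str:
    """Keep the first character and hide the rest."""
    return value[:1] + "***"


def read_record(role: str, record: dict, audit_log: list) -> dict:
    """Return the columns `role` may see, masking where required, and audit the access."""
    if role not in READABLE:
        audit_log.append((role, "denied"))
        raise PermissionError(f"role {role!r} has no read access")
    visible = {}
    for column, value in record.items():
        if column not in READABLE[role]:
            continue  # column-level access control
        if column in MASKED.get(role, set()):
            value = mask(str(value))
        visible[column] = value
    audit_log.append((role, "read"))
    return visible


audit_log: list = []
record = {"order_id": 7, "country": "DE", "email": "ana@example.com", "notes": "internal"}
analyst_view = read_record("analyst", record, audit_log)
support_view = read_record("support", record, audit_log)
print(analyst_view)
print(support_view)
```

Every call, allowed or denied, leaves an entry in `audit_log`, which is the minimal form of the audit trail described above.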

The Core Lesson: Principles Over Tools

The biggest lesson in building robust big data pipeline architecture is a subtle yet profound one: it’s not about the specific tools you use. While technologies like Kafka, Spark, Flink, Snowflake, and dbt are powerful enablers, each can be replaced by another tool that fills the same role. The enduring value comes from adhering to fundamental principles.

- Scalability: Designing systems that can gracefully handle increasing volumes of data, users, and complexity without compromising performance.

- Recoverability: Building in mechanisms to recover from failures, whether through retries, replayability of data, or disaster recovery strategies.

- Explainability: Ensuring that the data pipeline is transparent, understandable, and auditable. This means clear data lineage, well-documented transformations, and readily available metadata.

- Trustworthiness: Instilling confidence in the data by prioritizing data quality, security, and governance throughout the entire lifecycle.

These principles form the bedrock upon which any successful data platform is built. Tools will undoubtedly evolve, new technologies will emerge, but the need for effective data flow, rigorous discipline, and clear intent will remain constant.

Why a Well-Architected Pipeline Matters

In today’s fast-paced, data-driven world, the ability to rapidly ingest, process, and analyze massive datasets can be the difference between market leadership and obsolescence. A well-architected big data pipeline offers several critical advantages:

- Accelerated Insights: By streamlining data flow and automating processing, organizations can gain insights faster, enabling quicker decision-making and more agile responses to market changes.

- Enhanced Data Quality and Reliability: Robust pipelines with integrated data quality checks and governance frameworks ensure that the data used for analysis is accurate, consistent, and trustworthy.

- Scalability and Flexibility: A modular and adaptable architecture can easily accommodate new data sources, increased data volumes, and evolving business requirements without requiring a complete overhaul.

- Cost Efficiency: Optimized processing and storage strategies, coupled with efficient orchestration, can reduce operational costs associated with data management.

- Competitive Advantage: Organizations that can effectively leverage their data to understand customer behavior, optimize operations, and innovate new products and services gain a significant edge over competitors.

The Future of Big Data Architecture

As data volumes continue to explode and the demand for real-time insights intensifies, the principles outlined in this architecture will become even more critical. The convergence of stream and batch processing, the increasing emphasis on data governance and quality-by-design, and the continued evolution of cloud-native data services will shape the next generation of data platforms. However, the core philosophy will remain: focus on the flow, discipline, and intent, and the tools will follow.

In conclusion, constructing a robust big data pipeline architecture is an intricate but profoundly rewarding endeavor. It requires a strategic vision that transcends mere tool acquisition, emphasizing a holistic approach to data management. By meticulously designing each stage – Capture, Ingest, Store, Compute, and Use – and integrating essential cross-cutting concerns, organizations can build data platforms that are not just functional, but truly transformative.
