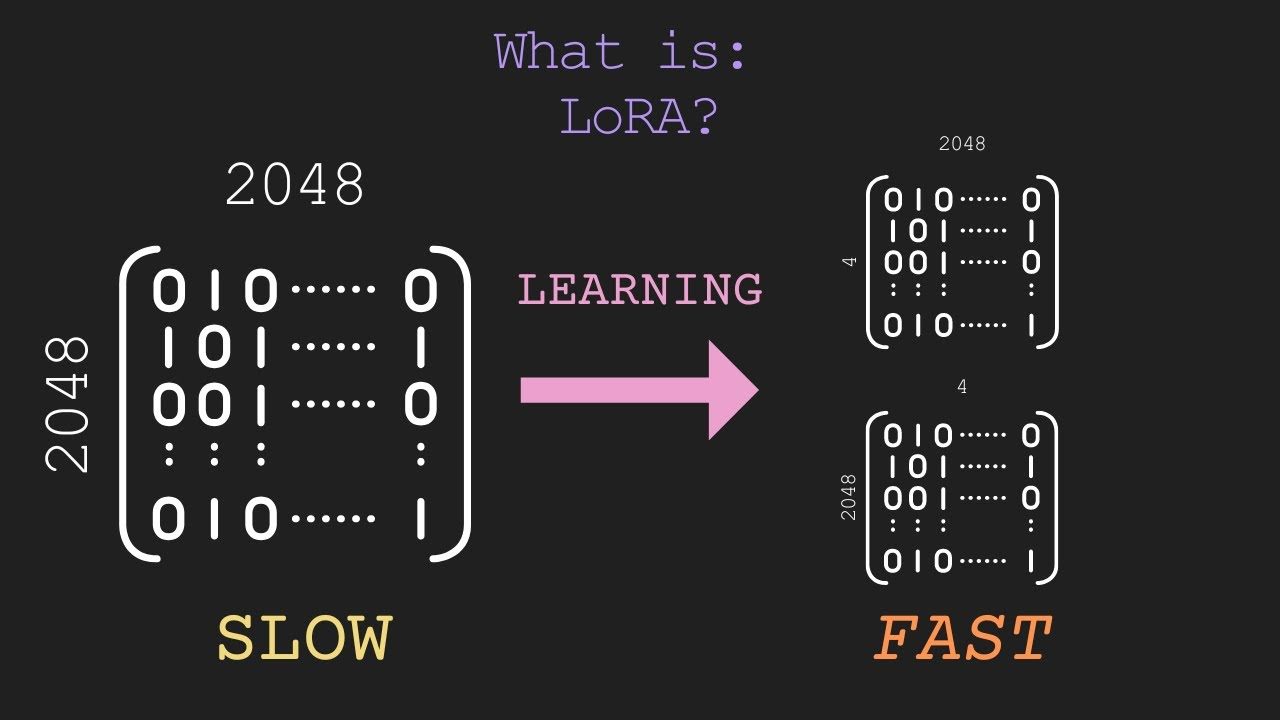

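LoRA (Low-Rank Adaptation) reduces the number of parameters that must be trained to fine-tune a model, which cuts training costs and GPU memory requirements. In the original LoRA paper, fine-tuning GPT-3 175B this way reduced the number of trainable parameters by roughly 10,000x and the GPU memory requirement by about 3x compared with full fine-tuning, while performing on par with or better than it on the benchmarks tested.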

LoRA achieves this with low-rank matrix factorization. Instead of updating a large weight matrix directly, it represents the change to that matrix as the product of two much smaller matrices. These have far fewer entries than the original, so they can be trained faster and at lower cost.

What is LoRA?

Adapting large language models to new tasks usually involves fine-tuning their parameters. This process can be slow and expensive: it typically requires high-performance GPUs, and for the largest models the cost can grow substantially over several training iterations. Several methods aim to make fine-tuning cheaper, and one of the most widely used is LoRA, introduced in the paper “LoRA: Low-Rank Adaptation of Large Language Models.” LoRA reduces the number of parameters that must be trained, which lowers memory use and can shorten training times considerably.

LoRA keeps a model’s original weight matrices frozen and learns a separate update for each matrix it adapts. That update is constrained to be low rank: it is written as the product of two thin matrices, so its rank (the number of linearly independent rows or columns) can be no larger than a small chosen value r. Training these small factors instead of the full matrix significantly decreases both the memory and the compute needed for fine-tuning.
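
In the notation of the original LoRA paper, with a frozen pre-trained weight matrix W₀ of size d×k, input x, and chosen rank r, the adapted layer and its parameter savings can be written as follows. The example sizes on the last line (d = k = 4096, r = 8) are illustrative, not taken from any specific model.

```latex
\begin{aligned}
W &= W_0 + \Delta W = W_0 + BA,
  \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k) \\
h &= W_0 x + \Delta W x = W_0 x + BAx \\
\text{trainable parameters:}\quad & dk \ \text{(full update)} \quad \text{vs.} \quad r(d + k) \ \text{(LoRA)} \\
d = k = 4096,\ r = 8:\quad & 16{,}777{,}216 \quad \text{vs.} \quad 65{,}536 \quad (256\times \text{ fewer})
\end{aligned}
```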

LoRA can also be applied to existing models without significant modification. For example, developers use it to fine-tune Stable Diffusion, a text-to-image model with on the order of a billion parameters, on their own datasets, getting results tailored to that data with shorter training times than full fine-tuning.

To fine-tune Stable Diffusion with LoRA, you need a base Stable Diffusion model and an image dataset; the DreamBooth and Pokemon BLIP captions examples referenced in LoRA’s GitHub repository are a good starting point. A GPU with about 11 GB of memory should be sufficient for these runs.

Note that LoRA does not shrink the original weight matrix itself. It leaves that matrix frozen and learns a compact low-rank update alongside it, which is what keeps training manageable.
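
To make “low rank” concrete, the sketch below builds a 4×4 update as the product of a 4×2 matrix B and a 2×4 matrix A, then computes its rank with exact Gaussian elimination. The matrix values are arbitrary illustrations, not taken from any real model. Whatever values are chosen, a product with an inner dimension of 2 can never have rank greater than 2, even though it has 16 entries.

```python
from fractions import Fraction

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def rank(M):
    """Rank via Gaussian elimination over exact fractions (no rounding error)."""
    rows = [[Fraction(v) for v in row] for row in M]
    r = 0
    for c in range(len(rows[0])):
        # Find a row at or below r with a nonzero entry in column c to use as pivot.
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate column c from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r

# Illustrative factors: B is 4x2 and A is 2x4, so the inner dimension r is 2.
B = [[1, 0], [2, 1], [0, 3], [1, 1]]
A = [[1, 2, 0, 1], [0, 1, 1, 2]]
delta_W = matmul(B, A)  # a 4x4 update matrix with 16 entries

print("rank of delta_W:", rank(delta_W))  # → rank of delta_W: 2
```

The size savings are negligible at this toy scale; they appear when d and k are in the thousands and r stays small.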

In short, LoRA lets you fine-tune a model quickly on a given dataset while saving on hardware costs. Because far fewer parameters are trained, it can also reduce overfitting, the common problem in which a model memorizes its training data instead of learning patterns that generalize. That combination makes LoRA a practical tool for improving task performance while keeping resource consumption low.


LoRA is a training technique

Full fine-tuning updates every weight in every layer of a model. LoRA instead freezes the pre-trained weights and adds small trainable low-rank matrices only to selected weight matrices, typically the attention projections of a transformer, which streamlines adaptation and saves computational resources. Because the original weights are left untouched, the pre-trained model keeps its general knowledge, which is particularly valuable when datasets change and the model needs frequent updates or re-adaptation.
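
The bookkeeping behind selective adaptation can be sketched as follows. The layer names and shapes below are hypothetical, loosely modeled on one block of a 4096-dimensional transformer. All matrices are treated as frozen, and rank-8 adapters are attached only to the query and value projections, a setting used in the original LoRA paper’s GPT-3 experiments; which matrices to target is a choice you tune per task.

```python
# Hypothetical weight shapes (d_out, d_in) for one transformer block.
layers = {
    "attn.q_proj": (4096, 4096),
    "attn.k_proj": (4096, 4096),
    "attn.v_proj": (4096, 4096),
    "attn.o_proj": (4096, 4096),
    "mlp.up_proj": (11008, 4096),
    "mlp.down_proj": (4096, 11008),
}
TARGETS = ("q_proj", "v_proj")  # matrices that receive LoRA adapters (an assumption)
RANK = 8                        # illustrative rank r

def lora_params(shape, r):
    """Trainable parameters of one adapter: B is d_out x r, A is r x d_in."""
    d_out, d_in = shape
    return r * (d_out + d_in)

# Every original weight stays frozen; only the adapters are trained.
frozen = sum(d_out * d_in for d_out, d_in in layers.values())
trainable = sum(
    lora_params(shape, RANK)
    for name, shape in layers.items()
    if name.endswith(TARGETS)
)
print(f"frozen: {frozen:,}  trainable: {trainable:,}  ({trainable / frozen:.3%})")
```

Here the adapters add 131,072 trainable parameters against 157,286,400 frozen ones, about 1/1200 of the block.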

LoRA can be used to fine-tune models for a variety of tasks, from instruction following to image generation. The resulting adapter lets a base model be tailored to a specific application without extensive retraining. For instance, a speech model could be adapted to recognize particular speech patterns for voice command recognition, although a sufficiently large and representative dataset is still needed to train it effectively.

When fine-tuning an image model on a new subject or concept, a key step is to tag it with a rare token: a unique identifier that has no strong association with any concept the model already knows. Because the token starts out nearly meaningless, the model can attach the new concept to it without overwriting related concepts, which helps prevent “concept bleeding” and language drift and lets the model pick up the new subject quickly.
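
As a small illustration of the tagging idea, the helpers below build captions that pair a rare identifier with the subject’s class name, the pattern popularized by DreamBooth. The identifier `sks`, the function names, and the prompt wording are placeholders chosen for this example; any token with little prior meaning in your model would serve. The plain class caption, without the identifier, is the kind of prompt DreamBooth uses to keep the model’s general notion of the class intact.

```python
RARE_TOKEN = "sks"  # placeholder identifier; pick a token your model barely knows

def instance_prompt(class_name: str, token: str = RARE_TOKEN) -> str:
    """Caption for training images of the new subject: rare token + class noun."""
    return f"a photo of {token} {class_name}"

def class_prompt(class_name: str) -> str:
    """Caption for generic images of the same class, without the rare token."""
    return f"a photo of {class_name}"

print(instance_prompt("dog"))  # → a photo of sks dog
print(class_prompt("dog"))     # → a photo of dog
```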

Using LoRA reduces the computational load of fine-tuning an LLM because the model is updated through small low-rank matrices, which shrinks the number of trainable parameters and the memory needed to train them. It also allows faster iterations and experiments, since each adaptation run finishes sooner.

Concretely, LoRA represents the weight update ΔW as the product of two lower-rank matrices, B and A, and adds BA to the frozen original weights. The shared inner dimension r of B and A caps the rank of the update and therefore how much it can change the output. B is initialized to zeros (and A to small random values), so BA starts at zero and the adapted model initially behaves exactly like the original; the update grows only as training proceeds. This makes fine-tuning an LLM far more cost-efficient for practical deployments.
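
A minimal forward pass makes the initialization argument concrete. This sketch assumes the scheme from the original paper: A starts with small random values, B starts at zero, and the update is scaled by alpha / r, where `alpha` is a hyperparameter (the value 16 here is illustrative). The class name `LoRALinear` and the toy 2×3 weight are hypothetical. Because B is zero, the adapted layer returns exactly the frozen layer’s output at the start of training.

```python
import random

def matvec(M, x):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

class LoRALinear:
    """Frozen weight W0 (d x k) plus a trainable low-rank update B @ A."""

    def __init__(self, W0, r, alpha=16, seed=0):
        rng = random.Random(seed)
        d, k = len(W0), len(W0[0])
        self.W0 = W0                                                        # frozen
        self.A = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, random
        self.B = [[0.0] * r for _ in range(d)]                              # d x r, zeros
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W0, x)                   # W0 x
        update = matvec(self.B, matvec(self.A, x))  # B (A x), never materializes B @ A
        return [b + self.scale * u for b, u in zip(base, update)]

W0 = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]  # toy 2x3 "pre-trained" weight
layer = LoRALinear(W0, r=1)
x = [1.0, 1.0, 1.0]
print(layer.forward(x))  # → [3.0, 4.0], identical to W0 x at initialization
```

Computing B(Ax) instead of (BA)x keeps the extra work proportional to r rather than to the full d×k matrix.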

LoRA is a fine-tuning technique

Full fine-tuning of a large language model updates every weight. LoRA (Low-Rank Adaptation) is built on the hypothesis that the changes needed to adapt a pre-trained model to a new task have a low intrinsic rank. It therefore constrains each weight update to a low-rank form, which reduces both the number of trainable parameters and the computational burden compared with full fine-tuning.

Because the update is expressed as a matrix decomposition, training is faster and uses memory more efficiently. Storage requirements drop as well: the frozen base model is stored once, and each task needs only its own small pair of low-rank matrices rather than a full copy of the model’s parameters. After training, the low-rank update can be merged into the base weights, so the adapted model runs with no extra inference latency.
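
Merging can be sketched under the same toy assumptions as a plain matrix addition, W = W₀ + (alpha / r)·BA. The factor values and the scale below are arbitrary stand-ins for trained ones, and `merge` is a hypothetical helper name. The check at the end confirms that the merged matrix produces the same output as running the frozen weight and the adapter separately, while W₀ itself is left unchanged for reuse with other adapters.

```python
def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def merge(W0, B, A, scale):
    """Return W0 + scale * (B @ A) as a new matrix; W0 itself is not modified."""
    BA = matmul(B, A)
    return [[w + scale * u for w, u in zip(w_row, u_row)] for w_row, u_row in zip(W0, BA)]

W0 = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]  # toy frozen weight (2x3)
B = [[0.5], [-1.0]]                       # pretend-trained factors with r = 1
A = [[2.0, 0.0, 1.0]]
scale = 2.0                               # alpha / r, illustrative

x = [1.0, 1.0, 1.0]
unmerged = [w + scale * u for w, u in zip(matvec(W0, x), matvec(B, matvec(A, x)))]
merged = matvec(merge(W0, B, A, scale), x)
print(unmerged, merged)  # → [6.0, -2.0] [6.0, -2.0]
```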

This method can be used to adapt state-of-the-art language models such as OpenAI’s GPT and Meta’s LLaMA to real-world data and tasks, for example producing models that follow instructions and understand natural requests better. Training begins with a substantial dataset of instructions and responses, either curated manually or generated with tools like ChatGPT; the model is then fine-tuned with LoRA on that dataset so it becomes more adept at following instructions and answering questions.
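
Preparing such a dataset usually means turning each instruction/response pair into a single training string. The template below is a hypothetical example in the spirit of common instruction-tuning formats; the field names `instruction` and `response`, the section markers, and the sample records are assumptions, and in practice you would use whatever format your base model and tooling expect.

```python
# Hypothetical prompt template; real projects define their own.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"

def format_example(record: dict) -> str:
    """Turn one {'instruction': ..., 'response': ...} record into training text."""
    return TEMPLATE.format(
        instruction=record["instruction"].strip(),
        response=record["response"].strip(),
    )

dataset = [
    {"instruction": "Translate 'good morning' into French.", "response": "Bonjour."},
    {"instruction": "Name a prime number between 10 and 15.", "response": "11 or 13."},
]
texts = [format_example(r) for r in dataset]
print(texts[0])
```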

LoRA also preserves the core structure and knowledge of the pre-trained model. Traditional full fine-tuning changes every weight and can erode the model’s generalization and stability; LoRA leaves the original weights untouched and expresses all changes through the low-rank matrices, which limits that risk.

As a result, LoRA can adapt a model to new data and domains quickly while requiring less memory and processing power than full fine-tuning, saving time and money for organizations with limited resources. Because each run is cheaper, it is also practical to iterate until the results meet expectations.

Successful fine-tuning requires a carefully curated dataset with a clear theme or subject. Including varied images (for example, the subject in different settings) helps prevent overfitting, which occurs when a model becomes so closely tailored to its training set that it fails to generalize.


LoRA is a tool

LoRA has been widely adopted by the open-source AI community as an effective way to fine-tune large language models and adapt them to specific tasks. It reduces the number of trainable parameters by several orders of magnitude, training only a small fraction as many parameters as the original model contains, which helps avoid overfitting, preserves generalization, and improves performance in specific use cases. LoRA also runs on less powerful hardware, making it attractive for businesses that want to deploy large foundation models but cannot afford the compute time to train and fine-tune them fully.

Traditional fine-tuning updates all model weights during adaptation, which creates an enormous computational burden. LoRA instead trains only small low-rank update matrices attached to selected layers, drastically reducing training time and GPU memory usage.

The size of each update matrix is controlled by the rank parameter “r.” Increasing r adds trainable parameters and gives the update more capacity, at the cost of more memory and slower training; decreasing r makes training cheaper but can reduce the quality of the adaptation. Ideally, r should be set to the smallest value that still achieves accurate adaptation results.
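
The effect of r on adapter size is straightforward to compute. For a single d×k weight matrix, a rank-r adapter trains r(d + k) parameters, so the count grows linearly with r. The sketch below assumes a square 4096×4096 projection, a size chosen only for illustration, and compares each adapter with the d·k parameters that full fine-tuning would update in that matrix.

```python
def adapter_params(d: int, k: int, r: int) -> int:
    """Parameters in B (d x r) plus A (r x k)."""
    return r * (d + k)

d = k = 4096   # illustrative layer size
full = d * k   # parameters updated by full fine-tuning of this one matrix
for r in (1, 4, 8, 16, 64):
    n = adapter_params(d, k, r)
    print(f"r={r:>2}: {n:>9,} trainable params ({full // n}x fewer than {full:,})")
```

Doubling r doubles the adapter’s size, so the ratio to full fine-tuning falls from 2048x at r = 1 to 32x at r = 64.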

LoRA is most often used to fine-tune large language models, but it can be applied to other neural network architectures as well. In transformer models it is typically applied to the weight matrices in the attention blocks; in the original paper’s GPT-3 175B experiments, this reduced the number of trainable parameters by up to 10,000x and lowered GPU memory requirements.

The method’s key advantage is that it reduces the number of trainable parameters by orders of magnitude. This dramatically cuts training time and GPU memory requirements, making it suitable for many computing platforms. Implementation is also straightforward, requiring only minor changes to the model’s structure.

LoRA also speeds up fine-tuning by limiting which weights are updated during adaptation. This is achieved by freezing the pre-trained model weights and injecting trainable low-rank update matrices directly into the layers of the transformer.
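
Freezing and injecting can be sketched end to end on a toy linear layer. The code below runs plain gradient descent on a squared-error loss, but only A and B receive updates; W₀ is never reassigned. The target vector, learning rate, scale, and fixed initial values of A (standing in for the usual random initialization so the run is reproducible) are illustrative choices. The gradients are derived by hand for this one-layer case: for y = W₀x + s·B(Ax), loss ½‖y − t‖², and error e = y − t, the gradient is s·e(Ax)ᵀ for B and s·(Bᵀe)xᵀ for A.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def loss_and_grads(W0, A, B, x, t, s):
    """Loss 0.5*||y - t||^2 for y = W0 x + s*B(Ax), with gradients for A and B only."""
    Ax = matvec(A, x)                                    # shape r
    y = [w + s * u for w, u in zip(matvec(W0, x), matvec(B, Ax))]
    e = [yi - ti for yi, ti in zip(y, t)]                # dL/dy
    loss = 0.5 * sum(ei * ei for ei in e)
    grad_B = [[s * ei * a for a in Ax] for ei in e]      # s * e (Ax)^T, shape d x r
    Bt_e = [sum(B[i][j] * e[i] for i in range(len(e))) for j in range(len(Ax))]
    grad_A = [[s * g * xi for xi in x] for g in Bt_e]    # s * (B^T e) x^T, shape r x k
    return loss, grad_A, grad_B

W0 = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0]]  # frozen toy weight: never reassigned below
W0_before = [row[:] for row in W0]
A = [[0.1, -0.05, 0.2]]                   # r = 1; fixed small values stand in for random init
B = [[0.0], [0.0]]                        # zero init, so training starts from W0's behavior
s, lr = 1.0, 0.05                         # illustrative scale (alpha / r) and learning rate
x, t = [1.0, 1.0, 1.0], [4.0, 3.0]        # W0 x = [3, 4]; the target asks for [4, 3]

losses = []
for step in range(200):
    loss, grad_A, grad_B = loss_and_grads(W0, A, B, x, t, s)
    losses.append(loss)
    # Gradient descent on the adapter factors only; W0 receives no update.
    A = [[a - lr * g for a, g in zip(row_a, row_g)] for row_a, row_g in zip(A, grad_A)]
    B = [[b - lr * g for b, g in zip(row_b, row_g)] for row_b, row_g in zip(B, grad_B)]

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.2e}")
```

Note that on the first step only B moves: with B at zero, the gradient for A is zero, and A starts learning once B becomes nonzero.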